Introduction to Serverless Architecture in Cloud-based Applications

You can consider serverless computing the next evolutionary step to make things easier for developers. Data centers, virtual machines, Elastic Compute Cloud (EC2), Simple Storage Service, and so on – these were the steps that brought us to today’s notion of serverless architecture.

What does this term encompass? How exactly does it benefit developers? And, more importantly, how can you leverage it in everyday software development? Let’s start with the basics and then pinpoint some benefits and challenges of serverless architecture.

What is a serverless architecture?

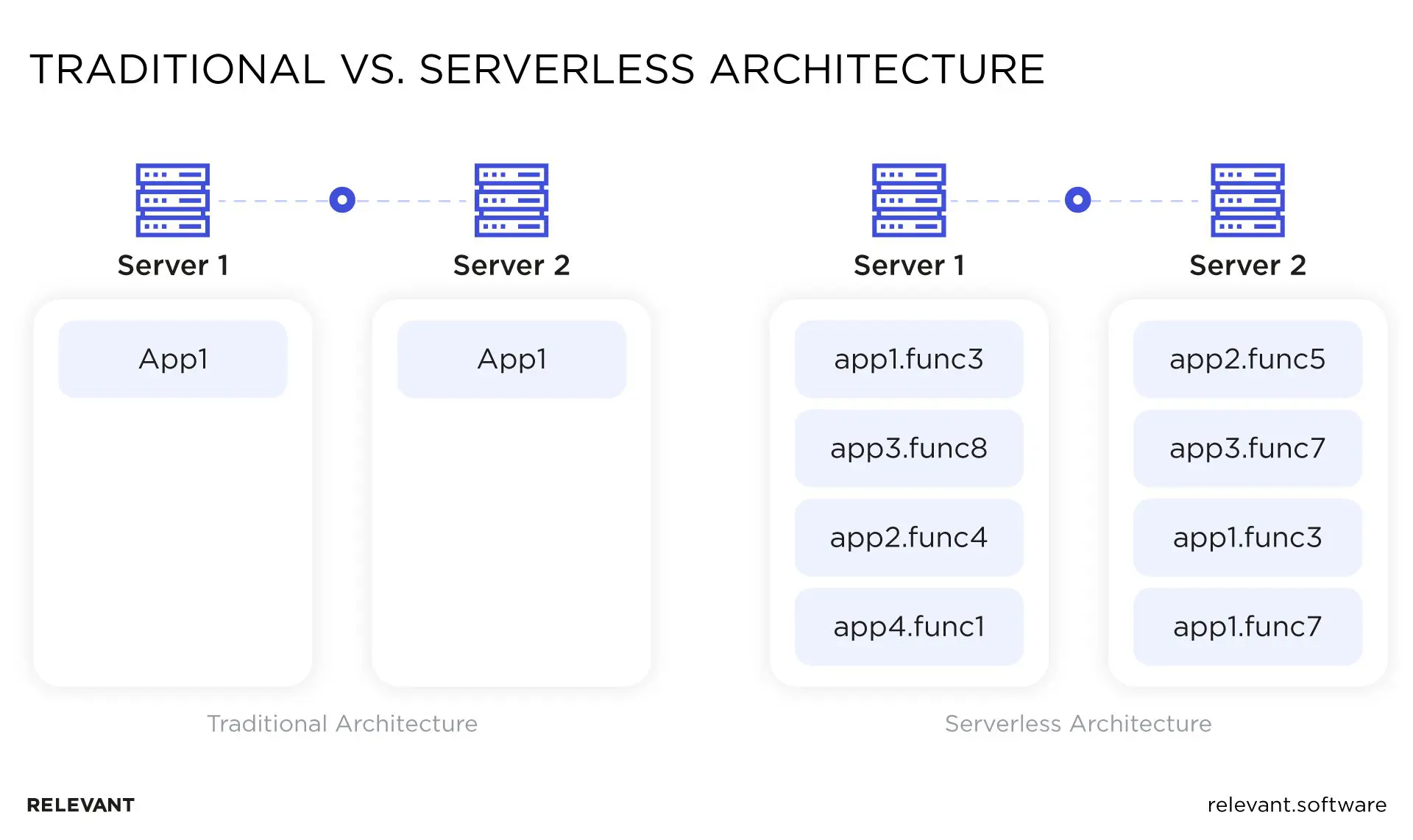

Serverless is a cloud-based code execution model in which cloud providers, rather than developers, manage servers and computing resources. Developers don't provision virtual machines or physical servers; the vendor allocates them automatically in the cloud.

Cloud providers take care of provisioning, maintaining, and scaling the underlying infrastructure. What's more, serverless architecture runs your app only when needed: you don't pay for always-on server components while the app sits idle. Instead, an event triggers the app code, and resources are dynamically allocated for that code. You stop paying as soon as the code finishes executing.

So, in a nutshell, serverless architecture is a way to build your cloud-based application without managing infrastructure. It eliminates the need for routine tasks like security patches, capacity management, load balancing, scaling, etc.

Still, serverless does not mean there are no servers at all; the term is somewhat misleading. Servers are simply abstracted away from app development because the vendor manages them.

An application like this usually consists of single-purpose functions, each performing one action. A developer writes a module or function that executes a specific action and uploads it to the cloud provider. To update it, the developer uploads a new version and deploys the change. Alongside these functions, the developer integrates pre-built backend services offered by the provider.
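To make "one function, one action" concrete, here is a minimal sketch of such a function in Python, written in AWS Lambda's two-argument `handler(event, context)` style. The order-total logic and the `items`/`price_cents`/`qty` event fields are hypothetical; the `statusCode`/`body` response shape is the one API Gateway proxy integrations expect.

```python
import json


def lambda_handler(event, context):
    """Single-purpose function: compute an order total in cents.

    The event shape {"items": [{"price_cents": ..., "qty": ...}]} is a
    hypothetical example. `context` is the runtime object Lambda passes
    in; it is unused here.
    """
    items = event.get("items", [])
    total = sum(item["price_cents"] * item["qty"] for item in items)
    return {"statusCode": 200, "body": json.dumps({"total_cents": total})}


if __name__ == "__main__":
    # Local invocation with a fake event; no AWS account needed.
    print(lambda_handler({"items": [{"price_cents": 500, "qty": 2}]}, None))
```

Because the handler is an ordinary function, you can call it locally with a fake event and `None` as the context, as the last lines do.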

There is one more technology that can take the infrastructure management burden off developers: containers. Although containers also run in the cloud and let you break an application into small, independently deployable units, they are quite different from serverless computing.

Serverless vs. containers

Unlike traditional servers and virtual machines, serverless computing and containers allow developers to build more flexible and scalable apps. Despite these similarities, serverless and containers have quite a few differences.

- Physical machines: serverless architecture does run on servers, but they are provisioned by the vendor, and a specific app or function has no particular machine assigned to it. Each container, on the other hand, runs on one machine, uses its capacity, and can be moved to another one if needed.

- Scalability: with containers, developers typically have to decide in advance how many to run. In serverless architecture, the backend scales automatically based on demand.

- Cost-efficiency: containers run constantly, so you pay for their capacity even when no one is using the app. With serverless, the code runs only when called, and you pay only for the capacity actually used.

- Maintenance: even when containers are hosted in the cloud, the provider doesn't maintain or update them for you. With serverless, from the developer's perspective, there is no backend to manage.

- Deployment: to set up containers, you have to configure system settings, libraries, etc., which takes longer than dealing with a serverless architecture. As soon as the code is uploaded, your serverless app is live.

- Testing: with serverless apps, it's hard to replicate the backend environment locally, so testing is challenging. Containers, however, can be run and tested locally in much the same form as in production.

Both serverless computing and containers significantly reduce infrastructure expenses, though serverless usually more so. While serverless architecture speeds up releases and iterations, containers offer more control over the environment. Hybrid architectures that combine the two are also possible.

Functions as a Service (FaaS)

One more concept we should distinguish from serverless computing is FaaS. There are two overlapping types of serverless: BaaS (backend-as-a-service) and FaaS (functions-as-a-service).

BaaS covers apps that rely on third-party services to handle server-side logic (the backend itself is the service). With FaaS, you upload your function code to a service like AWS Lambda or Google Cloud Functions, and the provider takes care of backend concerns such as provisioning and managing processes. The server-side logic remains in developers' hands, but it runs in stateless compute containers. In effect, FaaS runs your code on a third-party platform that you pay for only when your code runs.

FaaS can also be used outside a fully serverless architecture: you can replace part of your app with FaaS functions, although this can increase computing costs once the need to scale arises. Serverless computing as a whole offers a broader range of functionality than pay-per-use FaaS alone, since it encompasses a variety of technologies, and it is considered more cost-efficient.

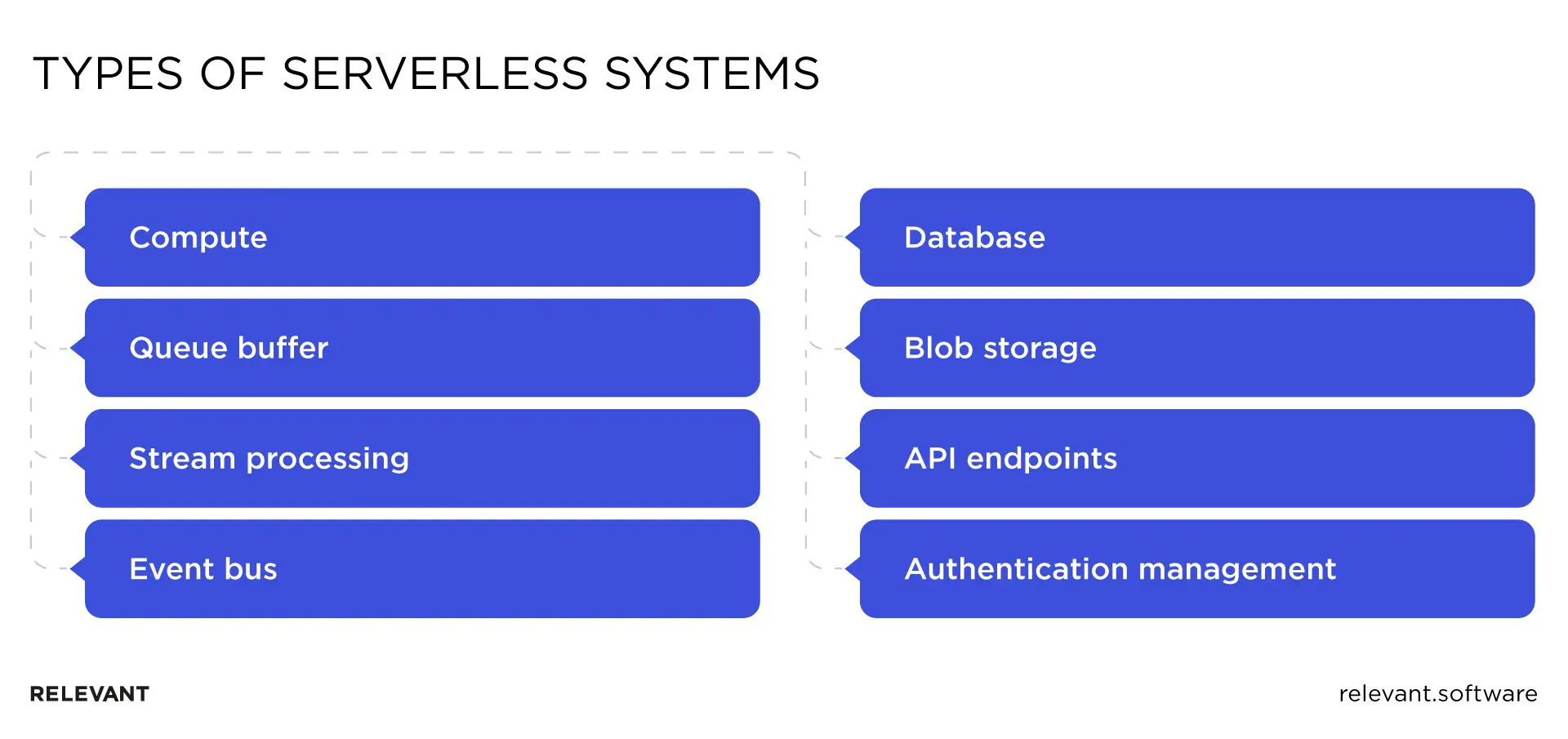

Types of serverless systems

While serverless computing started as FaaS, it now includes all sorts of cloud services. There are eight common types of serverless systems, distinguished by their primary purpose.

Compute

In a serverless compute service like AWS Lambda, developers can run code on demand or on a schedule. The FaaS platform provides only a containerized runtime. In addition to the most popular runtimes, such as Python, Node.js, and .NET, some platforms support custom runtimes as well.

AWS Lambda lets you call external APIs, write data to a database, authenticate user logins, and do almost anything else traditional infrastructure offers.
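Lambda can also run on a schedule. The sketch below assumes an Amazon EventBridge schedule rule invokes the function; such events carry `"detail-type": "Scheduled Event"` and a UTC `time` field. The session-cleanup task, the in-memory `SESSIONS` dictionary (a stand-in for a real database), and the 24-hour retention window are hypothetical.

```python
from datetime import datetime, timedelta, timezone

MAX_AGE = timedelta(hours=24)  # hypothetical retention window

# Hypothetical stand-in for a real data store (e.g., a database table).
SESSIONS = {
    "a": datetime(2024, 1, 1, 0, 0, tzinfo=timezone.utc),
    "b": datetime(2024, 1, 2, 9, 0, tzinfo=timezone.utc),
}


def find_expired(sessions, now):
    """Return IDs of sessions last seen more than MAX_AGE before `now`."""
    return sorted(sid for sid, last_seen in sessions.items() if now - last_seen > MAX_AGE)


def lambda_handler(event, context):
    # Scheduled EventBridge events have detail-type "Scheduled Event"
    # and an ISO-8601 "time" such as "2024-01-02T12:00:00Z".
    if event.get("detail-type") != "Scheduled Event":
        return {"skipped": True}
    now = datetime.fromisoformat(event["time"].replace("Z", "+00:00"))
    expired = find_expired(SESSIONS, now)
    for sid in expired:
        del SESSIONS[sid]
    return {"deleted": expired}
```

Keeping the cleanup rule in `find_expired` means it can be tested with any timestamp, independent of when the schedule fires.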

Queue buffer

Queuing services hold data while it moves from one part of an app to another. They decouple services through asynchronous communication and control the volume of data flowing between them. Amazon SQS is AWS's queuing service.
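A function consuming an SQS queue receives messages in batches under `event["Records"]`, each with a `messageId` and a string `body`. This sketch assumes the event source mapping has partial batch responses (`ReportBatchItemFailures`) enabled, so returning the failed message IDs in `batchItemFailures` lets SQS redeliver only those messages. The order-processing logic is hypothetical.

```python
import json


def process_order(order):
    """Hypothetical business logic; raises ValueError on invalid input."""
    if not isinstance(order, dict) or "order_id" not in order:
        raise ValueError("missing order_id")
    return order["order_id"]


def lambda_handler(event, context):
    failures = []
    for record in event["Records"]:
        try:
            process_order(json.loads(record["body"]))
        except ValueError:  # also catches json.JSONDecodeError (a subclass)
            failures.append({"itemIdentifier": record["messageId"]})
    # With ReportBatchItemFailures enabled, only these messages are retried.
    return {"batchItemFailures": failures}
```

Because the queue sits between producer and consumer, a burst of orders simply waits in SQS until the function works through it.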

Stream processing

This system receives a continuous flow of data packets and analyzes and processes them in real time. It can be used as a buffer, but stream processing is more useful for analytics: detecting patterns, applying conditions, and reacting accordingly. Amazon Kinesis covers video streams, data streams, data delivery (Kinesis Data Firehose), and data analytics.
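A Lambda function attached to a Kinesis data stream receives records whose payloads are base64-encoded under `record["kinesis"]["data"]`. The sketch assumes producers write JSON sensor readings (a hypothetical format) and applies a simple condition, flagging readings above a made-up threshold, to illustrate applying conditions and reacting to them.

```python
import base64
import json

THRESHOLD = 75.0  # hypothetical alert threshold


def lambda_handler(event, context):
    alerts = []
    for record in event["Records"]:
        # Kinesis delivers each payload base64-encoded.
        reading = json.loads(base64.b64decode(record["kinesis"]["data"]))
        if reading["temperature"] > THRESHOLD:
            alerts.append(reading["sensor_id"])
    return {"alerts": alerts}
```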

Event bus

An event bus receives messages from different sources and delivers them to various destinations. It resembles a queue buffer, but it can route many event types to many subscribers, while a queue typically passes each message to a single consumer. You can set custom rules that decide which subscribers receive which messages. Amazon EventBridge has numerous third-party integrations, from customer support tools to security suites.
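Rule-based routing is what sets an event bus apart. The sketch below is a deliberately simplified, local imitation of EventBridge event patterns, not the service itself: each pattern field lists allowed values, nested objects are matched recursively, and every field in the pattern must be present in the event. Real EventBridge patterns also support prefix, numeric, and other content filters omitted here, and the rule names, sources, and targets are hypothetical.

```python
def matches(pattern, event):
    """Simplified EventBridge-style matching on exact values only."""
    for key, expected in pattern.items():
        if key not in event:
            return False
        actual = event[key]
        if isinstance(expected, dict):
            # Nested pattern: recurse into the nested event object.
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        elif actual not in expected:
            # Leaf pattern: a list of allowed values.
            return False
    return True


# Hypothetical rules: rule name -> (event pattern, target name).
RULES = {
    "billing": ({"source": ["shop.orders"], "detail-type": ["OrderPlaced"]}, "billing-function"),
    "shipping": ({"source": ["shop.orders"], "detail": {"status": ["PAID"]}}, "shipping-queue"),
    "support": ({"source": ["shop.tickets"]}, "support-inbox"),
}


def route(event):
    """Return every target whose rule matches the event."""
    return sorted(target for pattern, target in RULES.values() if matches(pattern, event))
```

One event can fan out to several subscribers, which is the key difference from a queue.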

Database

The range of serverless databases is broad:

- SQL (Amazon Aurora Serverless) for combinations of transactional and analytical access patterns, with data that presents many-to-many relationships

- Graph (Amazon Cloud Directory) for complex data relationships

- Analytics (Amazon Athena) to provide analytical insights when applied in combination with another database

- NoSQL (Amazon DynamoDB) for large datasets and write-intensive applications.

Blob storage

Blob storage, such as Amazon S3, is used to store text files, videos, or images. For big data analysis, it can be combined with AWS Lambda and Amazon Athena.
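When S3 is connected to Lambda, an upload can trigger a function with an event describing the new object. The sketch reads the bucket name and object key from each record; keys arrive URL-encoded, hence `unquote_plus`. The bucket and key used in testing are hypothetical, and a real function would go on to process the object.

```python
from urllib.parse import unquote_plus


def lambda_handler(event, context):
    uploaded = []
    for record in event["Records"]:
        bucket = record["s3"]["bucket"]["name"]
        # Object keys are URL-encoded in S3 events (a space arrives as "+").
        key = unquote_plus(record["s3"]["object"]["key"])
        uploaded.append(f"s3://{bucket}/{key}")
    return uploaded
```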

API endpoints

The most common models for API implementation are REST and GraphQL. REST lets two systems communicate using HTTP methods, and it requires knowing the endpoints, parameters, and filters in advance. For REST APIs, AWS offers Amazon API Gateway. GraphQL lets clients combine filters, select which data points to return, aggregate information before retrieval, and so on. For GraphQL, there is the AWS AppSync service.
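For REST endpoints, API Gateway's Lambda proxy integration passes the HTTP request to the function as an event (with fields such as `httpMethod` and `pathParameters`) and expects a `statusCode`/`headers`/`body` response. The sketch assumes a hypothetical `GET /products/{id}` route and an in-memory `PRODUCTS` dictionary standing in for a database.

```python
import json

# Hypothetical in-memory data standing in for a database.
PRODUCTS = {"42": {"id": "42", "name": "Mug"}}


def response(status, body):
    """Build a response in the shape the proxy integration expects."""
    return {
        "statusCode": status,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(body),
    }


def lambda_handler(event, context):
    if event.get("httpMethod") != "GET":
        return response(405, {"error": "method not allowed"})
    # pathParameters is null when the route has no path variables.
    params = event.get("pathParameters") or {}
    product = PRODUCTS.get(params.get("id"))
    if product is None:
        return response(404, {"error": "not found"})
    return response(200, product)
```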

Authentication management

A serverless user management system lets apps authenticate, authorize, and manage users according to high security standards. Amazon Cognito provides a level of security that would otherwise take a sizable development team to replicate.
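One common way to combine Cognito with the services above is a Cognito user pool authorizer on an API Gateway REST API: API Gateway verifies the user's token before invoking the function and exposes the token's claims under `event["requestContext"]["authorizer"]["claims"]`. The sketch assumes that setup; the response fields are hypothetical.

```python
import json


def lambda_handler(event, context):
    # Claims are present only when the Cognito authorizer accepted the token.
    claims = event.get("requestContext", {}).get("authorizer", {}).get("claims")
    if not claims:
        return {"statusCode": 401, "body": json.dumps({"error": "unauthorized"})}
    # "sub" is the user's unique ID in the user pool.
    profile = {"user_id": claims["sub"], "email": claims.get("email")}
    return {"statusCode": 200, "body": json.dumps(profile)}
```

The function never validates tokens itself; that work stays with the managed authorizer.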

Here’s an example of how different types of serverless systems can be combined in one ecommerce app:

Managing a serverless environment with AWS Lambda

You can choose any serverless platform: Google Cloud Functions, Microsoft Azure Functions, Apache OpenWhisk, Spring Cloud Function, or Fn Project. At Relevant, though, we prefer working with AWS Lambda. Let's look at how to manage a serverless environment with this FaaS.

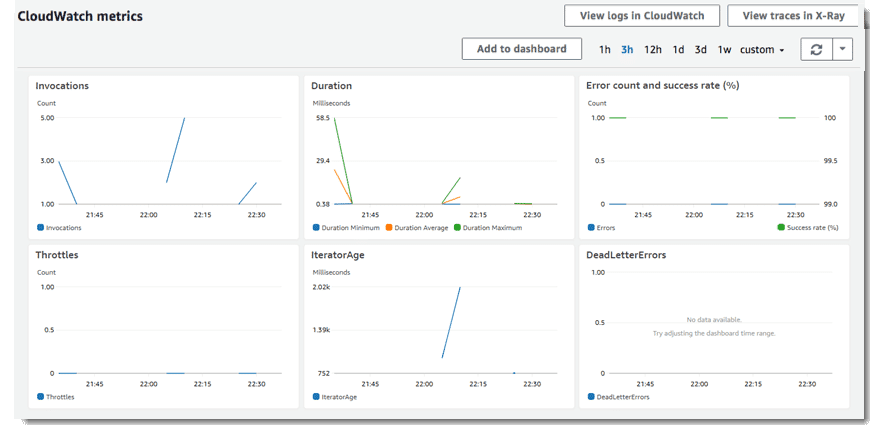

The hidden danger of using AWS Lambda is that the serverless environment becomes hard to manage. With many small functions running concurrently, you end up with a very complex system. That's why you need to equip your development team with tools for monitoring individual functions and the system's overall performance. The metrics you should pay attention to include:

- The total number of requests

- Duration of the request and its latency

- Error count and success rates

- Throttles
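The four metrics above can be derived from raw invocation data. The sketch below assumes a hypothetical list of invocation records with made-up outcome labels; in practice, CloudWatch computes these for you as the Invocations, Duration, Errors, and Throttles metrics. Note that here `requests` counts throttled attempts too, whereas CloudWatch's Invocations metric does not.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Invocation:
    duration_ms: float
    outcome: str  # hypothetical labels: "ok", "error", or "throttled"


def summarize(invocations):
    """Compute request count, duration, error/success rates, and throttles."""
    total = len(invocations)
    throttles = sum(1 for inv in invocations if inv.outcome == "throttled")
    errors = sum(1 for inv in invocations if inv.outcome == "error")
    # Throttled requests never ran, so they are excluded from duration stats.
    durations = [inv.duration_ms for inv in invocations if inv.outcome != "throttled"]
    successes = total - throttles - errors
    return {
        "requests": total,
        "avg_duration_ms": mean(durations) if durations else 0.0,
        "max_duration_ms": max(durations, default=0.0),
        "errors": errors,
        "success_rate": successes / total if total else 0.0,
        "throttles": throttles,
    }
```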

You can monitor each function with the help of Amazon CloudWatch. The service automatically stores and displays metrics through the AWS Lambda console. Here’s what the monitoring page looks like.

Apart from CloudWatch, you can use AWS Lambda with AWS X-Ray, which traces requests to help you identify performance issues.

AWS Lambda pricing

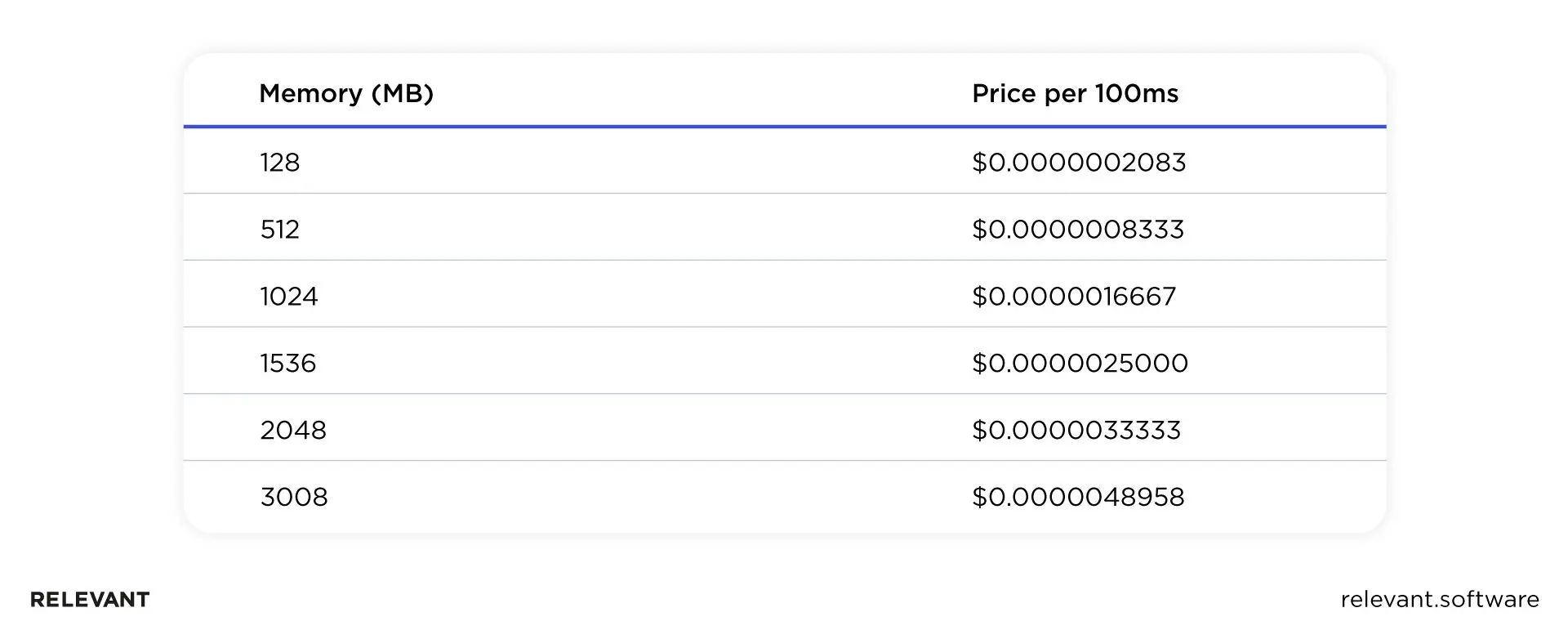

With AWS Lambda, you pay only for the resources you use: the service charges for the number of requests to your functions and the duration of code execution. AWS Lambda charges $0.20 per one million requests and $0.00001667 for every GB-second used.

A request is each code execution triggered by a client or another AWS service, so the more requests occur across all your functions, the more you pay. Duration runs from the moment your code starts executing until it returns or is otherwise terminated. It used to be rounded up to the nearest 100 ms; since December 2020, AWS has billed duration in 1 ms increments. Keep in mind that execution time depends on many factors, such as third-party dependencies and the language runtime.

In the end, the price also depends on the amount of memory you allocate to the function. Take a look at how the price for 100 ms corresponds to different memory sizes:
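The per-100 ms price follows directly from the GB-second rate quoted above. For a function with $M$ MB of memory, ignoring the request charge and any regional or architecture price differences:

```latex
\begin{aligned}
\text{price}_{100\,\text{ms}} &= \frac{M}{1024}\,\text{GB} \times 0.1\,\text{s} \times \$0.00001667/\text{GB-s} \\
M = 128:&\quad 0.125 \times 0.1 \times 0.00001667 \approx \$2.08 \times 10^{-7} \\
M = 512:&\quad 0.5 \times 0.1 \times 0.00001667 \approx \$8.3 \times 10^{-7} \\
M = 1024:&\quad 1 \times 0.1 \times 0.00001667 = \$1.667 \times 10^{-6}
\end{aligned}
```

Doubling the memory doubles the price per unit of time, so a larger allocation only pays off if it shortens execution proportionally.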

The good news is that AWS Lambda has a free usage tier that doesn't expire. It includes 1M free requests and 400,000 GB-seconds of compute time per month.
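To estimate a monthly bill, the two rates and the free tier can be combined as below. This is a rough sketch: it assumes the rates quoted in this article, treats `avg_duration_ms` as already-billed duration, and ignores regional and architecture price differences, ephemeral storage, and other charges.

```python
# Rates quoted in this article; actual prices vary by region and architecture.
REQUEST_PRICE_PER_MILLION = 0.20  # USD per 1M requests
GB_SECOND_PRICE = 0.00001667      # USD per GB-second
FREE_REQUESTS = 1_000_000         # monthly free tier
FREE_GB_SECONDS = 400_000         # monthly free tier


def monthly_cost(invocations, avg_duration_ms, memory_mb, apply_free_tier=True):
    """Estimate a month's Lambda bill in USD for a single workload."""
    gb_seconds = invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)
    billable_requests = invocations
    if apply_free_tier:
        billable_requests = max(0, invocations - FREE_REQUESTS)
        gb_seconds = max(0.0, gb_seconds - FREE_GB_SECONDS)
    request_cost = billable_requests / 1_000_000 * REQUEST_PRICE_PER_MILLION
    return request_cost + gb_seconds * GB_SECOND_PRICE


if __name__ == "__main__":
    # 1M invocations of 500 ms at 128 MB, without and with the free tier.
    print(round(monthly_cost(1_000_000, 500, 128, apply_free_tier=False), 2))
    print(round(monthly_cost(1_000_000, 500, 128), 2))
```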

Maintaining serverless applications

If you don't want system evolution and technical debt to cause you trouble, design your serverless architecture with maintainability in mind. Pay attention to the following characteristics of the system:

- Analysability. Defines how easy it is to diagnose and test the product’s components.

- Modifiability. Shows whether your code can be modified without side effects on the rest of the system.

- Testability. Represents how easy it is to execute tests and validate code modifications.

- Modularity. Shows whether a system is composed of independent components and whether changes to one of them affect the others. Luckily, because serverless apps usually have low coupling between modules, their components tend to be discrete.

- Deployability. The ability to deploy or install an update easily.
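Testability and modifiability improve when business logic is kept out of the handler itself. In the sketch below, `register_user` is plain logic that receives its storage dependency as an argument, and `make_handler` wires it into a Lambda-style handler. `InMemoryStore` is a hypothetical test double, so tests run without any cloud resources.

```python
import json


class InMemoryStore:
    """Hypothetical test double for a real data store."""

    def __init__(self):
        self.items = {}

    def put(self, key, value):
        self.items[key] = value


def register_user(store, payload):
    """Business logic: validate input and persist it via `store`."""
    email = payload.get("email", "").strip().lower()
    if "@" not in email:
        raise ValueError("invalid email")
    store.put(email, {"email": email})
    return email


def make_handler(store):
    """Wire the logic into a Lambda-style handler for a given store."""

    def handler(event, context):
        try:
            email = register_user(store, json.loads(event["body"]))
        except ValueError as exc:  # includes malformed JSON
            return {"statusCode": 400, "body": json.dumps({"error": str(exc)})}
        return {"statusCode": 201, "body": json.dumps({"email": email})}

    return handler
```

In production, the same `make_handler` would receive a store backed by a real database; swapping it out requires no change to `register_user`.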

Scaling serverless applications

For a serverless app, scaling is usually automatic and managed by the cloud vendor, both up and down. This means you don't have to worry about increased user traffic and server load, or about over-provisioning if your serverless application handles only occasional requests. You use close to the exact amount of resources you need. Say goodbye to idle servers!

Whenever the volume of traffic changes, your serverless app scales automatically. The catch is that every vendor imposes limits on RAM, CPU, and I/O operations, so keep those in mind. AWS Lambda, for instance, offers up to 10,240 MB of memory per function.
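Concurrency is another limit worth checking before relying on autoscaling. AWS describes a function's concurrency as roughly its average requests per second multiplied by its average duration in seconds. The sketch applies that estimate; the default regional quota of 1,000 it assumes is common but account-specific and adjustable, so check your own quota.

```python
import math

# Assumption: a common default regional quota; your account's may differ.
DEFAULT_ACCOUNT_CONCURRENCY = 1_000


def required_concurrency(requests_per_second, avg_duration_ms):
    """Estimate concurrent executions: request rate x duration (seconds)."""
    return math.ceil(requests_per_second * avg_duration_ms / 1000)


def fits_quota(requests_per_second, avg_duration_ms, quota=DEFAULT_ACCOUNT_CONCURRENCY):
    """True if the estimated concurrency stays within the quota."""
    return required_concurrency(requests_per_second, avg_duration_ms) <= quota
```

For example, 200 requests per second at 250 ms each needs about 50 concurrent executions, while 5,000 requests per second at 400 ms would need about 2,000.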

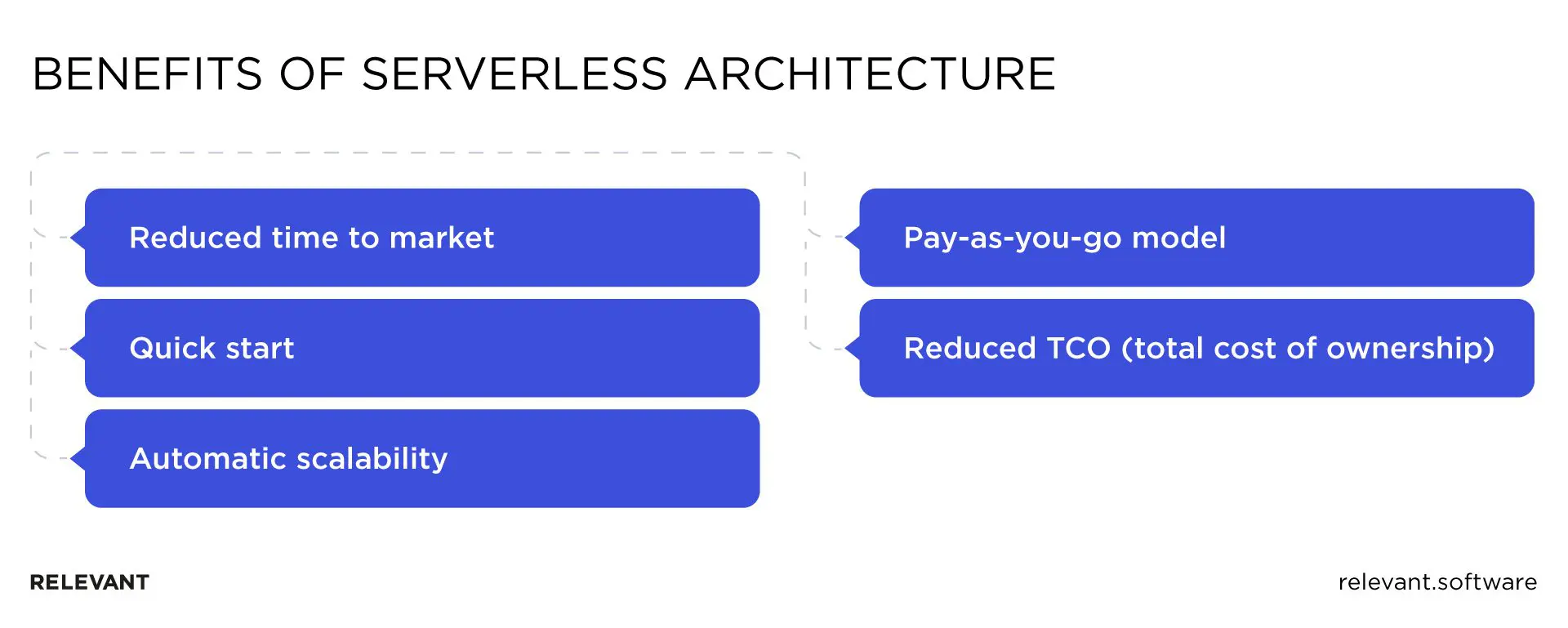

Benefits of serverless architecture

We've already mentioned that serverless is more convenient and offers more functionality than traditional server-based infrastructure. Developers can release faster, scale better, and reduce infrastructure expenses. Let's explore these points in more detail.

Reduced time to market

Developers can focus on business logic, since infrastructure provisioning, management, and scaling are no longer their tasks. Function code is also faster to write because each function is designed to do one thing. Updates are quick and easy as well: you upload the new code and deploy it through an API call, without redeploying the entire application.

Quick start

Serverless architecture includes BaaS building blocks for common functionality: the types of serverless systems described above. So you have ready-made solutions for databases, file storage, APIs, etc., which help you integrate your app with backend services and reach system stability quickly.

Automatic scalability

Serverless architectures scale both up and down according to demand for specific functions. Providers handle horizontal autoscaling, so unexpected traffic spikes are generally absorbed rather than bringing your system down.

Pay-as-you-go model

We've already mentioned this benefit: you pay only for the resources you use. AWS Lambda also offers a free usage tier that includes 1M requests and 400,000 GB-seconds of compute time per month.

Reduced TCO (total cost of ownership)

This pricing model reduces operational costs, as you pay only for code execution time (often just milliseconds per request); according to some estimates, this translates into around 30% monthly savings on memory costs.

With all these advantages, there are still some limitations to a serverless architecture.

Serverless challenges and solutions

Despite the fast adoption of serverless, many teams still choose server-centric infrastructure. The main concerns around serverless app development are function timeouts, latency, costly cloud migration, privacy, and vendor lock-in. They fall into the following groups.

Performance

Serverless apps running on Lambda may face several performance issues:

- Cold starts can add noticeable latency, depending on the language runtime, and running a function inside a VPC used to make them significantly longer (AWS has since removed most of that overhead). You can minimize the impact on users by analyzing cold-start frequency and duration with a service like Dashbird.

- The distance between the function and the user affects response time. With Lambda@Edge, you can deploy a function to Amazon CloudFront so that it runs in multiple locations closer to your users.

- The request path can affect latency: some requests require actions from several services, e.g., multiple Lambda functions, API Gateway, databases, and external services. It makes sense to think through the architecture patterns beforehand.

- Runtime configuration: Lambda lets you choose from a predefined range of memory sizes and allocates CPU power in proportion to the memory you configure. Lower settings are cheaper, but they can slow down some tasks, so decide how much memory (and therefore CPU) each function needs.
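To see how often cold starts actually hit users, a function can report them itself. Module-level code runs once per execution environment, so a flag set there is `True` only on the first invocation after a cold start. This is a minimal sketch; the log format is an assumption, chosen so that a monitoring tool can count `cold_start=True` lines.

```python
import time

# Module-level code runs once per execution environment (the cold start);
# later invocations in the same environment reuse these values.
_INIT_TIME = time.monotonic()
_cold = True


def lambda_handler(event, context):
    global _cold
    was_cold = _cold
    _cold = False
    age_s = round(time.monotonic() - _INIT_TIME, 3)
    # Lambda forwards stdout to CloudWatch Logs.
    print(f"cold_start={was_cold} environment_age_s={age_s}")
    return {"cold_start": was_cold}
```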

Monitoring and DevOps

Since serverless infrastructure spans multiple services and functions, issue detection and debugging become a real challenge. There are solutions for gathering logs and metrics, but that still leaves a lot of data to analyze. Third-party tools like Dashbird and Thundra can help: you can automate visualization, set alerts, and get insights into the root causes of problems and into your release pipelines.
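Much of the debugging pain eases if every function writes structured logs that tools can filter. The sketch below prints one JSON object per line and tags it with the invocation's `aws_request_id` (an attribute of the Lambda context object), so entries from one request can be grouped. The field names and the `order_id` example are hypothetical.

```python
import json
import time


def log(context, message, **fields):
    """Print one JSON object per line so log tools can filter by field."""
    record = {
        "ts": time.time(),
        # Context is None in local runs, so fall back gracefully.
        "request_id": getattr(context, "aws_request_id", None),
        "message": message,
        **fields,
    }
    line = json.dumps(record)
    print(line)
    return line


def lambda_handler(event, context):
    log(context, "order received", order_id=event.get("order_id"))
    # ... hypothetical business logic would go here ...
    log(context, "order processed", order_id=event.get("order_id"))
    return {"ok": True}
```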

Security

Serverless architectures run in public cloud environments and have significantly more attack surface than traditional apps. Therefore, watch out for functions with overly generous IAM policies, outdated libraries, and unauthorized requests.
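Overly generous IAM policies are easy to catch mechanically. The sketch below scans a policy document and flags `Allow` statements that grant wildcard actions (such as `s3:*`) or apply to every resource (`"*"`). It is a simplified check, not a replacement for AWS's own analysis tools such as IAM Access Analyzer; the sample policy used in testing is hypothetical.

```python
def overly_broad_statements(policy):
    """Return Allow statements that use wildcard actions or resources."""
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may be an object
        statements = [statements]
    findings = []
    for stmt in statements:
        if stmt.get("Effect") != "Allow":
            continue
        actions = stmt.get("Action", [])
        resources = stmt.get("Resource", [])
        # Action and Resource may each be a string or a list of strings.
        actions = [actions] if isinstance(actions, str) else actions
        resources = [resources] if isinstance(resources, str) else resources
        wide_action = any(a == "*" or a.endswith(":*") for a in actions)
        if wide_action or "*" in resources:
            findings.append(stmt)
    return findings
```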

Other issues

Migrating to the cloud, or from one cloud to another, is quite a challenge, since serverless offerings are provider-specific. While tools like the Serverless Framework exist, managing multi-cloud workloads requires significant effort. On the other hand, cloud providers offer rich and varied services, so the need to switch providers may never arise.

One more issue is that your expenses grow with the number of layers you need to manage. Containers may turn out less expensive when you need to host multiple layers of complex logic, or something that runs 24/7 with a consistent workload. In that case, keeping everything in one container makes sense.
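Whether containers are cheaper for a steady workload comes down to a break-even comparison. The sketch below prices a constant request rate on Lambda using the rates quoted in this article (free tier ignored, 30-day month) and compares it with a flat monthly container cost that you supply; the $30 figure used in testing is purely hypothetical.

```python
GB_SECOND_PRICE = 0.00001667        # USD per GB-second (rate quoted above)
REQUEST_PRICE = 0.20 / 1_000_000    # USD per request
SECONDS_PER_MONTH = 30 * 24 * 3600  # 30-day month


def lambda_monthly_cost(rps, avg_duration_ms, memory_mb):
    """Cost of serving a constant request rate on Lambda for a month."""
    requests = rps * SECONDS_PER_MONTH
    gb_seconds = requests * (avg_duration_ms / 1000) * (memory_mb / 1024)
    return requests * REQUEST_PRICE + gb_seconds * GB_SECOND_PRICE


def cheaper_option(rps, avg_duration_ms, memory_mb, container_monthly_cost):
    """Compare Lambda with a flat, user-supplied container cost."""
    serverless = lambda_monthly_cost(rps, avg_duration_ms, memory_mb)
    return "serverless" if serverless < container_monthly_cost else "container"
```

At one request per second (100 ms, 128 MB), Lambda costs about a dollar a month; at 100 requests per second, the same function costs roughly a hundred times more, which is where an always-on container can win.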

Speaking of cost, let’s have a closer look at how serverless cloud services are billed.

The economics of serverless computing

Pay-per-use is the standard model for all cloud providers, but there's more to it. Take AWS Lambda and Azure Functions: both charge 20 cents per one million function invocations. Sounds cheap, right? However, this is not the main element of the total cost.

Since executing functions consumes computing resources, FaaS providers also charge for the combination of allocated memory and execution time. For AWS Lambda, that's $0.00001667 for every GB-second used. The amount of memory is configurable, so the price of function execution depends on your configuration.

Even with these additional charges, Lambda is still very cheap: one million invocations with an average duration of 500 ms and 128 MB of allocated memory cost around $1.24. A single 128 MB function running continuously for a 30-day month accrues roughly $5.40 in compute charges, plus request charges. Furthermore, to attract more users, AWS Lambda and Azure Functions offer free tiers generous enough to cover the compute time of a 128 MB function running 24/7 for a month.
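Spelled out with the rates quoted above (free tier ignored, no regional or architecture price differences):

```latex
\begin{aligned}
\text{GB-s} &= 10^{6} \times 0.5\,\text{s} \times 0.125\,\text{GB} = 62{,}500 \\
\text{compute} &= 62{,}500 \times \$0.00001667 \approx \$1.04 \\
\text{requests} &= 10^{6} \times \frac{\$0.20}{10^{6}} = \$0.20 \\
\text{total} &\approx \$1.24 \\[4pt]
\text{continuous, 30 days:}\quad \text{GB-s} &= 30 \times 86{,}400\,\text{s} \times 0.125\,\text{GB} = 324{,}000 \\
\text{compute} &= 324{,}000 \times \$0.00001667 \approx \$5.40
\end{aligned}
```

The 324,000 GB-seconds of a continuously running 128 MB function fit within the 400,000 GB-second monthly free tier, which is why such a function's compute time can be free.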

Still, serverless architecture is not always a money saver. Certain workloads require considerable computing resources, and functions should be validated against the FaaS environment limitations.
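Validating functions against the environment's limits can be automated as a pre-deployment check. The sketch below encodes a few of AWS Lambda's published limits (memory from 128 MB to 10,240 MB, a 15-minute maximum timeout, and a roughly 6 MB synchronous invocation payload); these values can change, so treat them as assumptions to verify against current quotas. `FunctionSpec` and the sample functions are hypothetical.

```python
from dataclasses import dataclass

# Assumed limits; verify against the current AWS Lambda quotas page.
MIN_MEMORY_MB = 128
MAX_MEMORY_MB = 10_240
MAX_TIMEOUT_S = 900  # 15 minutes
MAX_SYNC_PAYLOAD_BYTES = 6 * 1024 * 1024  # ~6 MB synchronous payload


@dataclass
class FunctionSpec:
    name: str
    memory_mb: int
    timeout_s: int
    expected_payload_bytes: int


def limit_violations(spec):
    """List every limit the planned function would exceed."""
    problems = []
    if not MIN_MEMORY_MB <= spec.memory_mb <= MAX_MEMORY_MB:
        problems.append(f"{spec.name}: memory {spec.memory_mb} MB is outside {MIN_MEMORY_MB}-{MAX_MEMORY_MB} MB")
    if spec.timeout_s > MAX_TIMEOUT_S:
        problems.append(f"{spec.name}: timeout {spec.timeout_s} s exceeds {MAX_TIMEOUT_S} s")
    if spec.expected_payload_bytes > MAX_SYNC_PAYLOAD_BYTES:
        problems.append(f"{spec.name}: payload exceeds the synchronous limit")
    return problems
```

A workload that fails these checks, such as an hour-long batch job, is usually a better fit for containers or a managed batch service.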

We also recommend comparing the prices of serverless functions across providers. Historically, Amazon was the first cloud provider to offer a FaaS platform, AWS Lambda. Other options include Google Cloud Functions and Microsoft Azure Functions, as well as open-source alternatives such as Apache OpenWhisk, Spring Cloud Function, and Fn Project.

Let Relevant Software help you with serverless apps

At Relevant, we help companies around the world build their products and scale engineering teams. Here’s how we can help with creating a serverless application:

- We start by gathering requirements.

- Then, we identify a suitable technology stack for your serverless app.

- Next, we provide you with a development plan and finalize our estimates.

- We move to designing the system’s architecture and writing documentation.

- Finally, we set up the environment for data storage, for example an Amazon S3 bucket or an Amazon Redshift data warehouse cluster. We recommend AWS Lambda for your app's backend; to use it, we develop the functions for your website and deploy them.

Let’s look at our two projects where we successfully implemented serverless architecture on AWS.

24OnOff

24OnOff is a platform that minimizes paperwork for construction companies. It helps them with time tracking and project management. We divided the system’s features into separate modules and created a serverless app with AWS. Also, we set up a monitoring system that covers all endpoints to ensure the stability of HTTP requests. Additionally, it tracks server resources like CPU usage, memory consumption, network, etc. to improve capacity planning and reliability.

FirstHomeCoach

FirstHomeCoach is a SaaS platform that helps UK citizens buy property. It connects users with advisors who help them secure a mortgage, get insurance, and handle all legal paperwork. Our team designed the app’s serverless architecture and built the system from scratch. We established microservices, which created the necessary isolation between the application server and business processes. To speed up the development, we enabled static typing. Also, we developed a Node.js-based cluster module that allows the app to use the full power of the CPU.

Relevant Software’s pick

At Relevant, we opt for AWS serverless architecture because of all the benefits we’ve mentioned: it helps to start quickly, gain system stability instantly, and reduce time to market significantly. It scales automatically, costs less, and our clients love the TCO of their serverless applications.

We know a thing or two about developing serverless apps, so drop us a line if you need help or want to find out more about our expertise.
