AI in Cybersecurity Examples: How Technologies Can Protect the Digital World

Today’s businesses face numerous security challenges. Increasingly bold attackers, exploding data volumes, and ever more complex infrastructure all impede security teams’ efforts to protect data, control user access, and promptly identify and address breaches. To safeguard digital assets efficiently, businesses must move beyond a solely human-centered approach and integrate AI technology as a collaborative partner, as the AI in cybersecurity examples below illustrate.

However, many businesses still underestimate AI’s potential. As an experienced company that provides AI development services, we could not remain on the sidelines, so we have prepared this guide to highlight the power of AI in cybersecurity.


The Strategic Role of AI in Bolstering Cybersecurity Measures

In cybersecurity, the terms artificial intelligence (AI), machine learning (ML), and deep learning (DL) refer to different data processing and analysis layers. At its essence, AI facilitates automated, intelligent decision-making. Within AI, machine learning focuses on algorithms that learn from data to make predictions and provide recommendations. In cybersecurity, that means algorithms can identify unusual network traffic patterns—potential signs of a security breach.

Deep learning, which drills down even further, uses complex neural networks to process data in ways similar to the human brain. This process is particularly good at handling large, unstructured data sets like images and text, which are common in security settings. For example, DL methods can pick up malware properties that simpler algorithms might miss, which leads to faster and more precise threat detection.

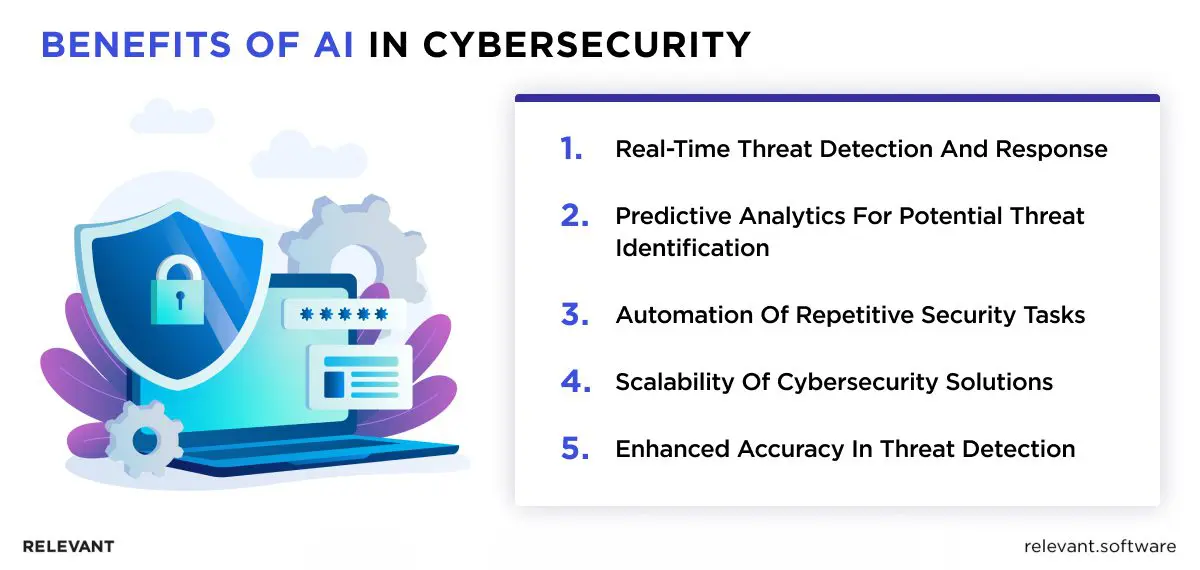

Key Benefits of AI in Cybersecurity

Initially, AI relied on rule-based systems grounded on observations to monitor network traffic and user behaviors. Human operators manually applied these rules to track activities, although this approach had notable limitations.

In the late 20th century, the technology evolved into a more effective tool for cybersecurity. Advancements in machine learning enabled AI to establish its own rule thresholds, which reduces the need for extensive human intervention. Moreover, AI has gained the capability to incorporate DL algorithms into threat detection, which allows it to develop more efficient strategies for potential threat identification.

To date, AI has demonstrated its value to cybersecurity teams through benefits such as:

Benefit 1. Real-time Threat Detection and Response

Artificial intelligence enhances cybersecurity by detecting and responding to threats as they occur. This real-time capability allows organizations to address vulnerabilities immediately, which reduces the potential damage from cyber attacks. AI systems analyze data continuously, which helps identify unusual activities that may signal a security breach.

Benefit 2. Predictive Analytics for Potential Threat Identification

AI uses predictive analytics to foresee potential threats before they escalate. AI systems can examine historical data and identify patterns to predict likely attack vectors and alert security teams. This proactive approach helps prepare defenses against possible attacks, which substantially decreases risk exposure.

Benefit 3. Automation of Repetitive Security Tasks

AI automates repetitive tasks in cybersecurity operations, such as log analysis, which traditionally requires substantial human effort. This automation allows security staff to concentrate on more strategic tasks that require human judgment. As a result, cybersecurity teams become more efficient and can manage their resources better, addressing complex issues that AI cannot handle alone.

Benefit 4. Scalability of Cybersecurity Solutions

AI enables cybersecurity solutions to scale as an organization grows. As data volumes and transaction rates increase, AI systems can adjust and manage enhanced security measures without the need for proportional increases in human resources. This scalability ensures that companies can leverage the benefits of AI in cyber security and maintain robust security measures as their digital footprint expands.

Benefit 5. Enhanced Accuracy in Threat Detection

AI-driven cybersecurity tools greatly improve threat detection accuracy. ML algorithms enable AI cybersecurity systems to distinguish real threats from harmless anomalies effectively. This precision prevents the waste of resources on false alarms and enables security teams to concentrate their efforts on actual dangers.
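One way to make "accuracy" concrete is to compare precision (how many alerts were real threats) and recall (how many real threats raised an alert). The sketch below is a minimal illustration; the `AlertCounts` type and every number in it are hypothetical and are not taken from any real deployment.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AlertCounts:
    """Outcome counts for one review period (all values are hypothetical)."""
    true_positives: int   # alerts that were real threats
    false_positives: int  # alerts that turned out to be harmless
    false_negatives: int  # real threats that raised no alert


def precision(c: AlertCounts) -> float:
    """Share of raised alerts that were real threats."""
    raised = c.true_positives + c.false_positives
    return c.true_positives / raised if raised else 0.0


def recall(c: AlertCounts) -> float:
    """Share of real threats that raised an alert."""
    actual = c.true_positives + c.false_negatives
    return c.true_positives / actual if actual else 0.0


# Made-up counts for two detectors reviewing the same period.
rule_based = AlertCounts(true_positives=40, false_positives=160, false_negatives=10)
ml_based = AlertCounts(true_positives=45, false_positives=15, false_negatives=5)

for name, counts in (("rule-based", rule_based), ("ml-based", ml_based)):
    print(f"{name}: precision={precision(counts):.2f}, recall={recall(counts):.2f}")
```

In this invented comparison, the higher precision of the second detector means analysts would spend far less time on false alarms.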

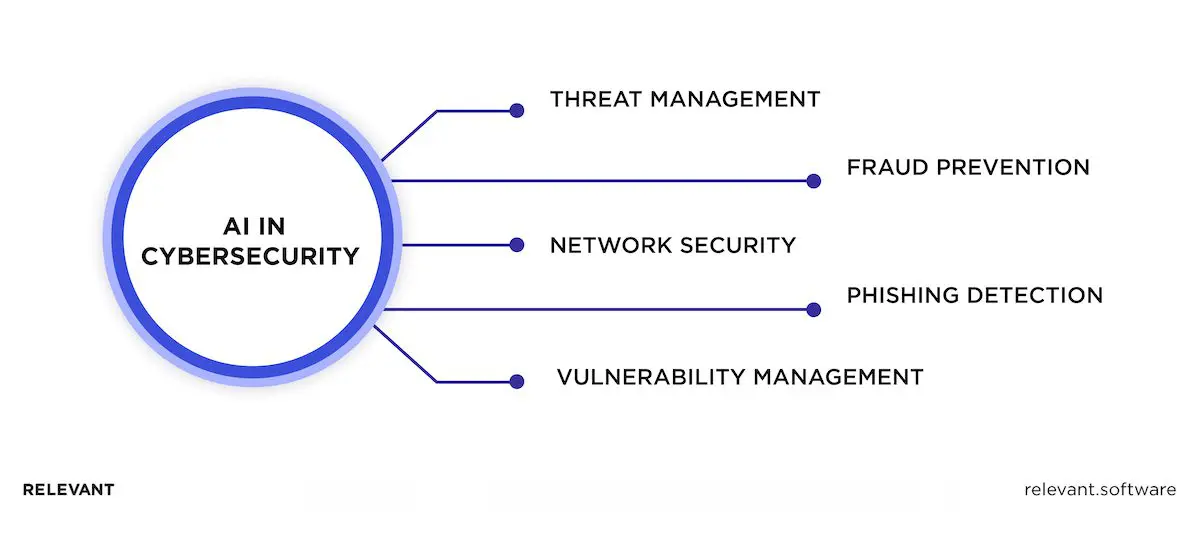

Examples of AI in Cybersecurity Applications

Without any doubt, cybersecurity has become essential for every organization, irrespective of its size or sector. It serves as a next-generation shield for digital assets and a solid response to increasingly complex cyber threats. But how is AI used in cyber security? Here are some examples of AI in cyber security you might have missed:

Threat Detection and Management

AI-driven threat intelligence systems represent a significant advancement in the continuous effort to protect data infrastructures. This powerful approach leverages three key capabilities:

- AI-driven threat intelligence: AI can analyze vast amounts of data in real time, which helps to identify anomalies and potential threats that might escape human attention.

- Real-time monitoring and anomaly detection: Suspicious login attempts and unusual network traffic patterns can trigger AI-powered risk analysis, which generates detailed incident summaries and automates responses. This capability speeds up alert investigations and triage processes by an average of 55%.

- Automated incident response: When AI identifies a threat, it can trigger predefined actions to contain the damage, such as isolating infected devices or blocking malicious traffic.
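The monitoring and response capabilities above can be sketched in a few lines. This is a deliberately simple statistical stand-in (a rolling z-score), not the method of any particular product; the function names, the threshold, the action label, and the login counts are all assumptions made for illustration.

```python
from __future__ import annotations

from statistics import mean, stdev


def find_anomalies(counts: list[int], window: int = 5, threshold: float = 3.0) -> list[int]:
    """Return indexes whose value lies more than `threshold` standard
    deviations from the mean of the preceding `window` values."""
    flagged = []
    for i in range(window, len(counts)):
        history = counts[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma == 0:
            # Flat baseline: any change at all counts as a deviation.
            is_anomaly = counts[i] != mu
        else:
            is_anomaly = abs(counts[i] - mu) / sigma > threshold
        if is_anomaly:
            flagged.append(i)
    return flagged


def contain(minute: int, count: int) -> dict:
    """Stand-in for a predefined response; a real system would call its own tooling here."""
    return {"minute": minute, "failed_logins": count, "action": "block_source_and_alert"}


# Hypothetical failed-login counts per minute for one account.
failed_logins = [4, 5, 3, 4, 6, 5, 4, 48, 5, 4]
incidents = [contain(i, failed_logins[i]) for i in find_anomalies(failed_logins)]
print(incidents)
```

A production system would typically replace the z-score with a learned model and tune the threshold against real traffic, but the detect-then-respond flow stays the same.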

Real-life AI in cybersecurity examples: The Federal Government employs CrowdStrike’s Falcon AI platform to analyze billions of events in real-time, which offers protection against all types of attacks, from commodity malware to sophisticated state-sponsored intrusions. Its AI models, trained on a vast dataset, allow the platform to detect malicious activities and prevent breaches by identifying suspicious patterns that humans might overlook.

Fraud Detection and Prevention

Fraud remains a constant threat in numerous industries, from finance and e-commerce to healthcare and insurance. It results in significant financial losses and damages trust. Fortunately, AI cybersecurity software can help detect and prevent fraud through:

- Automated Anomaly Detection: AI algorithms in fraud detection systems can learn to recognize signs of fraudulent activity by monitoring transaction patterns. These signs may include atypical transaction amounts, frequent transactions from the same device, or purchases from different locations within a brief period. When an anomaly is detected, the system flags the transaction for further review.

- Behavioral Analysis: AI technology can track customer behavior over time to spot any unusual activity. For instance, if a customer who typically makes modest purchases suddenly starts to buy expensive items, the AI system can mark these transactions as potentially suspicious.

- Natural Language Processing (NLP): AI models can utilize NLP to scrutinize customer communications, such as emails or chat messages, for signs of fraud. For instance, if a customer's messages ask to update account details and then request a password reset shortly after, the AI system may recognize this pattern as a potential fraud risk.
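As a rough illustration of the anomaly and behavioral checks listed above, the sketch below compares a new transaction with a customer's own history. The hand-set thresholds stand in for what a trained model would learn from data; the `Transaction` type, the `review_reasons` function, and all sample values are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median


@dataclass(frozen=True)
class Transaction:
    amount: float
    country: str
    timestamp: datetime


def review_reasons(
    tx: Transaction,
    history: list[Transaction],
    amount_factor: float = 5.0,
    travel_window: timedelta = timedelta(hours=1),
) -> list[str]:
    """Return the reasons (if any) why `tx` should go to manual review."""
    reasons: list[str] = []
    if not history:
        return reasons  # no baseline yet, nothing to compare against
    typical = median(t.amount for t in history)
    if tx.amount > amount_factor * typical:
        reasons.append("amount far above the customer's typical spend")
    last = max(history, key=lambda t: t.timestamp)
    if tx.country != last.country and tx.timestamp - last.timestamp <= travel_window:
        reasons.append("different country within a short period")
    return reasons


# Hypothetical history: three modest purchases in one country.
day = datetime(2024, 1, 15)
history = [
    Transaction(20.0, "US", day.replace(hour=9)),
    Transaction(35.0, "US", day.replace(hour=11)),
    Transaction(25.0, "US", day.replace(hour=12)),
]
suspicious = Transaction(900.0, "FR", day.replace(hour=12, minute=20))
ordinary = Transaction(30.0, "US", day.replace(hour=12, minute=30))

print(review_reasons(suspicious, history))
print(review_reasons(ordinary, history))
```

Returning reasons rather than a bare yes/no keeps the flag reviewable by a human analyst.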

Real-life examples of AI in cybersecurity: American Express began developing AI expert systems for fraud detection early on. It utilizes AI to analyze customer transactions in real time and identify suspicious activity such as unusual spending patterns, location inconsistencies, and known fraudulent activities. This helps block fraudulent transactions and protect customers from financial losses.

Network Security

AI cybersecurity models can balance security with user experience, analyze the risk of each login attempt, and verify users through behavioral data. This approach simplifies access for verified users and can reduce fraud costs by up to 90%. AI cybersecurity fortifies networks, particularly through Intrusion Detection Systems (IDS) deployment, and safeguards Internet of Things (IoT) devices.

- Intrusion Detection Systems (IDS) Powered by AI: Traditional IDS rely on pre-defined rules to identify threats. AI-powered IDS can analyze network traffic for more nuanced patterns of malicious activity, even against zero-day attacks. This enhances the effectiveness of network security and reduces the risk of breaches.

- AI in Securing IoT Devices: The Internet of Things (IoT) is expanding quickly, yet many of these devices suffer from poor security protocols. Fortunately, AI cybersecurity models can monitor IoT systems for suspicious activity and pinpoint potential vulnerabilities.

Real-life AI in cybersecurity examples: Cisco recently unveiled a groundbreaking Identity Intelligence solution within the Cisco Security Cloud, enhanced by new AI-powered security features. It enables users to manage their entire identity base, secure accounts at risk, eliminate unnecessary and risky privileges, detect behavioral anomalies, and block high-risk access attempts.


Vulnerability Management

CrowdStrike’s 2023 Global Threat Report reveals that intrusion activities without malware comprise as much as 71% of all detections recorded on the CrowdStrike Threat Graph. Forty-seven percent of security breaches were due to vulnerabilities that were not patched.

Additionally, 56% of organizations handle the remediation of security vulnerabilities manually.

Manual patching methods fall short: 20% of endpoints remain vulnerable after remediation because they are not fully updated with all patches. AI can handle this much better through:

- Identification, Categorization, and Prioritization of Vulnerabilities: Manual vulnerability scanning is often slow and susceptible to errors. AI algorithms, however, can analyze large datasets such as security reports, threat intelligence feeds, and system configurations to identify vulnerabilities more accurately and efficiently.

- Predictive Analytics for Foresight: AI does more than simply identify existing vulnerabilities. ML models analyze historical data on exploit trends and attacker behavior to predict which vulnerabilities attackers will likely target next. That enables security teams to prioritize patching and mitigation efforts and to focus on the most critical risks.
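One simple way to combine the two ideas above is to rank vulnerabilities by severity multiplied by the predicted likelihood of exploitation. The sketch below assumes a 0-10 severity score and a 0-1 probability supplied by some predictive model; the `Vulnerability` type, the scoring formula, and the sample entries are illustrative assumptions, not a standard.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Vulnerability:
    identifier: str             # placeholder IDs, not real CVE numbers
    severity: float             # 0-10, for example a CVSS-style base score
    exploit_probability: float  # 0-1, assumed output of a predictive model


def risk_score(v: Vulnerability) -> float:
    """Expected-impact style score: severity weighted by likelihood of exploitation."""
    return v.severity * v.exploit_probability


def prioritize(vulnerabilities: list[Vulnerability]) -> list[Vulnerability]:
    """Order vulnerabilities so the riskiest is patched first."""
    return sorted(vulnerabilities, key=risk_score, reverse=True)


# Hypothetical backlog.
backlog = [
    Vulnerability("VULN-A", severity=9.8, exploit_probability=0.02),
    Vulnerability("VULN-B", severity=7.5, exploit_probability=0.60),
    Vulnerability("VULN-C", severity=5.3, exploit_probability=0.10),
]

for v in prioritize(backlog):
    print(f"{v.identifier}: {risk_score(v):.2f}")
```

Note how the most severe item (VULN-A) drops to the bottom here because the assumed model considers it unlikely to be exploited.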

Real-life examples of AI in cybersecurity: JPMorgan Chase, a leading financial institution, utilizes AI-powered vulnerability management solutions. These tools consistently monitor extensive networks for vulnerabilities and prioritize patching efforts based on the severity of the vulnerability and the probability of exploitation. This proactive approach helps them realize the benefits of AI in cyber security and minimize their security risks.

Phishing Detection

Phishing attacks try to trick users into revealing sensitive information or clicking malicious links. AI algorithms can significantly enhance phishing detection through:

- Phishing Websites and Email Identification: With Natural Language Processing, AI can analyze website content, email text, and sender information to identify subtle red flags that might elude human detection. This capability includes analyzing email headers for inconsistencies, spotting suspicious URLs, and detecting unusual language patterns in phishing attempts.

- Machine Learning Models for Email Filtering: ML models can be trained on vast datasets of phishing and legitimate emails. Over time, these models can learn to identify hackers’ tactics with exceptional accuracy. This improvement in email filtering helps block suspicious emails before they ever reach user inboxes.
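Before an ML model can filter email, the red flags mentioned above have to be turned into features. The sketch below extracts three such signals with the Python standard library only; the feature names, the word list, and the sample messages are assumptions for illustration, and a real filter would feed many more features into a trained classifier.

```python
from __future__ import annotations

import re
from urllib.parse import urlparse

URL_PATTERN = re.compile(r"https?://[^\s\"'<>]+")
IPV4_HOST = re.compile(r"\d{1,3}(?:\.\d{1,3}){3}")
URGENT_WORDS = ("immediately", "suspended", "verify")  # illustrative list only


def domain_of(address: str) -> str:
    """Extract the lowercase domain from 'user@host' or 'Name <user@host>'."""
    return address.rsplit("@", 1)[-1].strip("> ").lower()


def phishing_signals(sender: str, reply_to: str, body: str) -> dict[str, bool]:
    """Return simple boolean features that a classifier could consume."""
    hosts = [urlparse(url).hostname or "" for url in URL_PATTERN.findall(body)]
    text = body.lower()
    return {
        # Header inconsistency: replies go to a different domain than the sender's.
        "reply_to_mismatch": bool(reply_to) and domain_of(reply_to) != domain_of(sender),
        # Suspicious URL: link points at a raw IP address instead of a hostname.
        "ip_address_url": any(IPV4_HOST.fullmatch(host) for host in hosts),
        # Unusual language: pressure words often seen in phishing attempts.
        "urgent_language": any(word in text for word in URGENT_WORDS),
    }


suspicious = phishing_signals(
    sender="Support <support@example.com>",
    reply_to="help@example-support.invalid",
    body="Your account is suspended. Verify immediately at http://192.0.2.10/login",
)
benign = phishing_signals(
    sender="news@example.com",
    reply_to="",
    body="Our monthly newsletter is available at https://example.com/news",
)
print(suspicious)
print(benign)
```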

Real-life examples of AI in cybersecurity: Microsoft utilizes AI-powered email filtering systems in its Office 365 suite. These systems leverage ML models to analyze emails and identify phishing attempts with high accuracy.

When Should AI Not Be Used in Cyber Security?

With AI and ML, cybersecurity software products can closely monitor ongoing behavioral patterns within workflows, evaluate potential threats, and promptly alert the relevant team based on these assessments. But it’s not a one-size-fits-all solution. There are specific situations where relying on AI might not be advisable:

1. Limited or Biased Data

AI models require large amounts of relevant and high-quality data to function effectively. AI deployment in environments where data is sparse, unstructured, or highly sensitive might lead to inaccuracies or compliance issues.

- The Garbage In, Garbage Out Problem: AI models in cybersecurity are only as good as the data they train on. Limited datasets may not provide enough information to learn effectively, which makes the AI unable to generalize and detect new malware variants. For example, an attempt to train an AI to recognize malware with just a few examples would likely fail to identify newer, evolved threats.

- Data Bias: Real-world data often carries inherent societal biases. An AI model trained on such biased data might inadvertently learn to discriminate against certain types of network traffic or wrongly flag innocent users as threats. This can result in unfair security measures and diminish trust in the system.

- Data Relevance: The volume of data is not the only concern—its relevance is equally important. For example, AI trained on generic network traffic may not suffice in environments with specific needs, such as the healthcare sector, which requires adherence to stringent data security protocols. This mismatch can lead to ineffective security measures in industries where precise and tailored AI applications are crucial.

2. Unexplained Decisions

Many AI and cybersecurity models, particularly deep learning ones, often operate as “black boxes.” While these models can detect threats with impressive accuracy, the reasons behind their decisions frequently remain opaque. This lack of transparency presents several challenges:

- Trust Issues: When the rationale for decisions is not clear, it becomes difficult for security teams and stakeholders to trust the AI’s judgments. This skepticism can hinder the adoption of AI technologies and limit their use in critical security operations.

- Limited Human Oversight: These systems’ “black box” nature makes it challenging for human operators to oversee and intervene effectively. When security professionals cannot understand or predict how an AI model makes its decisions, they are less able to manage and control the system’s operations, which potentially leads to overlooked security breaches or inappropriate responses to false alarms.
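For contrast with a black-box model, the sketch below shows what a transparent alternative can look like: a linear risk score that reports how much each input contributed. The feature names and weights are invented for illustration; in practice they would be learned from data, and such a simple model may be less accurate than a deep one.

```python
from __future__ import annotations

# Hypothetical weights; a real model would learn these from labeled events.
WEIGHTS = {"failed_logins": 0.4, "new_device": 1.5, "off_hours": 0.8}


def explain_score(features: dict[str, float]) -> tuple[float, list[tuple[str, float]]]:
    """Return the total risk score and per-feature contributions, largest first."""
    contributions = {
        name: WEIGHTS[name] * value
        for name, value in features.items()
        if name in WEIGHTS  # ignore inputs the model does not know about
    }
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return sum(contributions.values()), ranked


score, reasons = explain_score({"failed_logins": 6, "new_device": 1, "off_hours": 0})
print(f"risk score: {score:.1f}")
for name, contribution in reasons:
    print(f"  {name}: {contribution:+.1f}")
```

Because every contribution is visible, an analyst can see why a login was scored as risky and challenge the result if it looks wrong.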

3. Zero-Day Attacks

As already mentioned, AI can identify patterns and spot known threats. However, cybercriminals constantly develop new attack methods, including zero-day attacks, which exploit vulnerabilities that have not yet been identified or patched. AI models trained only on existing data may fail to recognize these novel threats.

4. Strict Regulatory and Compliance Requirements

In industries with strict regulatory requirements, such as finance or healthcare, the use of AI in cybersecurity can complicate compliance. Regulations may require transparent processes and decision-making trails that AI cannot provide, which necessitates more traditional approaches.

5. Risk of Over-reliance

Over-reliance on AI can lead to a false sense of security. AI cyber security systems are susceptible to failures, can be tampered with, or may face situations for which they are not trained. It’s important to adopt a balanced approach that includes adequate human oversight to manage and reduce these risks effectively.

Here are some additional factors to consider:

- Shortage of cybersecurity talent: Organizations face a scarcity of skilled cybersecurity professionals who can keep pace with rapidly evolving threats. However, the stark truth is that even an influx of experts might not suffice to outpace cunning cyber attackers.

- Cost and Expertise: Robust AI and cybersecurity solutions require significant infrastructure, data, and investment in skilled personnel. Traditional security tools might be a more cost-effective option for smaller organizations with limited resources.

- Explainability vs. Accuracy: There’s a trade-off between explainability and accuracy in some AI models. Simpler, more transparent models might be less accurate, while precise, highly accurate models might be opaque in their reasoning. The right balance depends on your specific security needs.

In conclusion, AI is a powerful tool for cybersecurity, but it’s crucial to understand its limitations. If you carefully consider the abovementioned factors, you can make informed decisions about when and how to leverage AI for optimal security outcomes.


Crucial Considerations for AI Implementation in Cybersecurity

According to IDC, one in four AI projects typically fails. This statistic highlights challenges that we, as professionals, need to address to ensure the success of AI initiatives. This table gives a clear overview of the key challenges in AI/ML implementation that we consider the most important.

| Challenge | Description |
| --- | --- |
| 1. Non-aligned internal processes | Despite investments in security tools and platforms, many companies still need to overcome security hurdles due to inadequate internal process improvements and a lack of cultural change. This prevents full utilization of security operation centers, and fragmented processes and lack of automation weaken defenses against cybercriminals. |
| 2. Decoupling of storage systems | Organizations often fail to separate storage systems from compute layers and do not use message brokers such as RabbitMQ and Kafka for analytics outside the system. This inefficiency hampers AI effectiveness and increases vendor lock-in risks with changes in product or platform. |
| 3. The issue of malware signatures | Security teams use signatures (like fingerprints) of malicious code to identify and alert about malware. However, as malware evolves, old signatures become ineffective: once the code changes, a signature no longer matches unless security teams have already catalogued the new variant. |
| 4. The complexity of data encryption | Advanced encryption methods complicate the isolation of threats. Deep packet inspection (DPI), used to monitor external traffic, struggles with complexity and can be exploited by hackers. It places additional strain on firewalls and slows down infrastructure. |
| 5. Choosing the right AI use cases | AI and cybersecurity projects often fail because organizations try to implement AI company-wide without focusing on specific, manageable use cases. That overlooks the importance of learning from smaller, targeted initiatives to avoid missed opportunities and project failures. |
| 6. Lack of labeled data | Typically, cybersecurity logs lack labels; for instance, there’s no indication whether a document download is malicious or if a login is legitimate. Due to this shortage, cybersecurity detection often depends on unsupervised learning techniques like clustering for anomaly detection, which don’t need labels but have notable limitations. |
| 7. Adversarial attacks | AI greatly bolsters cybersecurity defenses, but savvy adversaries can craft methods to bypass AI-driven security systems. These attacks entail altering input data to trick AI models into making errors, such as misclassifications or overlooking threats. Building strong defenses against these tactics continues to be a major challenge for experts in the field. |
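The malware-signature challenge in the table can be demonstrated with a hash-based signature. This is a minimal sketch that assumes signatures are SHA-256 digests of the whole payload (real products use richer signature formats); the payload is a harmless placeholder string.

```python
import hashlib


def signature(payload: bytes) -> str:
    """Fingerprint a payload as the hex SHA-256 digest of its bytes."""
    return hashlib.sha256(payload).hexdigest()


# Harmless placeholder standing in for a previously catalogued sample.
known_sample = b"echo harmless-placeholder-payload"
known_signatures = {signature(known_sample)}


def is_known_malware(payload: bytes) -> bool:
    """Exact-match lookup against the signature database."""
    return signature(payload) in known_signatures


# A trivially modified variant: same behavior, two extra bytes.
variant = known_sample + b" #"

print(is_known_malware(known_sample))  # the catalogued sample matches
print(is_known_malware(variant))       # the variant slips past the signature
```

Any change to the bytes yields a different digest, which is why signature databases need constant updating and why behavior-based detection is used alongside them.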

Effective AI cyber security hinges on mature processes, cultural alignment, skilled teams, and the selection of appropriate use cases. To achieve this, security teams should conduct internal audits to identify and prioritize vulnerable areas, starting with data filtering to isolate untrusted sources. However, this isn’t a fixed rule; the key is to proceed thoughtfully when integrating AI into cybersecurity practices.

AI in Cybersecurity Examples: Final Words

While artificial intelligence significantly enhances cybersecurity efforts by sifting through and analyzing enormous amounts of data to identify patterns and generate insights, human professionals’ strategic input and expertise remain indispensable. That’s why forward-thinking companies try to hire AI engineers with a background in cybersecurity to bridge both complex worlds. And we are ready to provide you with such AI cybersecurity experts. Cooperation with Relevant Software means securing a team that shields your digital endeavors and ensures peace of mind.

Contact us to make security as it should be—smart, responsive, and built around you.
