How to Use AI in Software Development (Hint: It Stops at MVP, Not Scaling)

The last few years have made it easy to believe that artificial intelligence will build, test, and launch software while everyone else sips coffee and waits for deployment to finish. Tools now write code, design screens, test features, and even suggest roadmaps. It can feel as if the future of software development sits one clever prompt away from “no developers needed.” Anyone who has tried this in a real project knows how quickly that illusion breaks. AI in software development works brilliantly for early experiments, prototypes, and MVPs.

Problems appear when a product meets real users and must handle scale, regulation, and constant change. AI does not clear architecture debt, cover messy edge cases, or resolve hard product trade-offs. It accelerates discovery and delivery, yet long-term engineering still rests on structure, discipline, and a clear technical strategy.

This article looks at what AI actually does well in software development and where the effect stops. Drawing on real projects from our AI software development practice at Relevant Software, we will show how teams use AI to validate ideas faster, shorten time-to-market, and design smarter MVPs. We will also explain why human judgment, experience, and ownership still anchor every successful scale-up.

The AI hype in software development

Before getting lost in statistics about how many developers already “use AI,” it helps to see why this hype exists in the first place. The attraction is obvious: shorter cycles, smaller teams, and code that writes itself, or at least looks as if it does in the demos. The excitement around AI for software development has created both optimism and confusion across startups and enterprises alike.

The role of AI in software development evolved from a helpful assistant to a headline act. Every week, a new startup promises an AI that “builds entire applications in minutes.” The demos are slick, the code snippets neat, and the generated dashboards shiny. Yet behind the curtain, someone still reviews architecture, fixes API calls, and ensures compliance before launch.

The hype deserves context, not cynicism. It’s a sign that engineering automation has matured enough to save real time and money. But like every technological leap, it works best when guided by clarity, not wishful thinking.

Why everyone wants AI to build their next product

If every founder and CTO seems obsessed with AI-built software, it’s because the sales pitch sounds irresistible: faster development, lower costs, and instant prototypes. Who wouldn’t want to replace months of iteration with a single day of “AI magic”?

In theory, the benefits of AI in software development promise exactly that. Large language models draft user flows, generate UI mockups, write boilerplate code, and even run test cases. With enough prompts, an entire MVP can appear overnight. The result feels almost unfair until the demo ends and the debugging begins.

Reality reminds us that AI in product development accelerates creation, not comprehension. It can model what good code looks like, but it cannot decide what good means for a specific business case or user journey. That judgment still belongs to human engineers and product strategists. Artificial intelligence delivers speed, but only when the direction is already clear.

We’ve seen it firsthand while building early versions for clients at our MVP development company. AI tools reduce friction in ideation, data structuring, and low-level coding. They make early versions cheaper and easier to test. But when the project grows into a complex product with live users, those same shortcuts demand careful refactoring.

So, yes, AI for MVP development can help you build your next product, at least the first draft. Just don’t let it choose the roadmap, or you might end up with a brilliant prototype that collapses under the weight of reality.

The confusion between AI as a tool vs. AI as a builder

One of the biggest missteps we see when product owners lean into AI is treating it as the builder, not the accelerator.

Misconception: “AI can handle the whole development process – write features, test them, deploy them, maintain them.”

Reality: AI assists certain tasks, but someone still needs to design the product, direct the architecture, validate assumptions, and handle the messy parts.

Let’s break this down:

| Role of AI | What it does well | What it doesn’t replace |
| --- | --- | --- |
| AI as a tool | Generate boilerplate code, suggest UI layouts, automate simple tests, and offer code-review suggestions | Strategic design, architectural decisions, complex bug fixing, and team collaboration |
| AI as a builder | The notion that you feed it a requirement and get production-ready software with no human oversight | Team communication, evolving feature set, long-term upkeep, handling unknowns |

The confusion arises because marketing messages blur the line: “AI will build your app in hours.” Without careful framing, teams expect overnight miracles, and then hit scaling constraints.

We should instead think of AI as a turbo-charger, not the engine. It gives your project a powerful start, helping you reach an MVP quickly, just like an electric race car getting a boost off the line. Once you reach highway speed during scaling and maintenance, you still need solid engineering, such as suspension, brakes, and steering.

Where AI adds the greatest value in software development

After the hype cools and the press releases fade, the real question emerges: where and how should you use AI in software development? The answer is not “everywhere.” It thrives in specific moments of the software development lifecycle: those that benefit from fast analysis, structured problem-solving, and the automation of repetitive tasks. When used correctly, AI strengthens development teams, improves code quality, and unlocks new ways to gain business value without losing human control.

Let’s look at where AI integration fits naturally and where human expertise still defines the outcome.

Early discovery and research

Every product starts with uncertainty: unclear market fit, too many user opinions, and incomplete data. AI cuts through this clutter with evidence instead of guesswork. Machine learning tools parse customer reviews, trend reports, and usage data to uncover unmet needs or patterns no analyst could spot overnight.

In practice, AI-driven research accelerates requirement gathering and trims weeks off early validation. A model can cluster user pain points across forums, map competitors’ strengths, and highlight opportunities worth testing. Teams get a sharper focus before design even begins.
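To make the idea of clustering user pain points concrete, here is a minimal sketch in plain Python. It is an illustration only: real research pipelines typically rely on embeddings or a trained model, whereas this version uses simple word overlap (Jaccard similarity). The function names, the stopword list, the threshold, and the sample feedback are all assumptions made for the example.

```python
import re

# Small illustrative stopword list; a real pipeline would use a fuller one.
STOPWORDS = {"the", "a", "an", "is", "to", "and", "of", "in", "it", "i", "my", "for", "on"}


def tokens(text: str) -> set[str]:
    """Lowercase a comment and keep its non-trivial words as a set."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS}


def jaccard(a: set[str], b: set[str]) -> float:
    """Share of words two comments have in common (0.0 to 1.0)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def cluster_comments(comments: list[str], threshold: float = 0.25) -> list[list[str]]:
    """Greedy single-pass grouping: a comment joins the first cluster whose
    seed comment is similar enough, otherwise it starts a new cluster."""
    clusters: list[tuple[set[str], list[str]]] = []
    for comment in comments:
        words = tokens(comment)
        for seed_words, members in clusters:
            if jaccard(words, seed_words) >= threshold:
                members.append(comment)
                break
        else:
            clusters.append((words, [comment]))
    return [members for _, members in clusters]


# Hypothetical forum feedback
feedback = [
    "Export to CSV is slow",
    "CSV export takes forever, very slow",
    "Login fails on mobile",
    "Cannot login on my mobile phone",
]
groups = cluster_comments(feedback)
for group in groups:
    print(group)
```

On this sample, the two export complaints and the two login complaints end up in separate groups. A person still has to decide which group is worth building for.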

It’s the phase where AI for software development acts like a microscope, enhancing problem-solving and reducing risk. It doesn’t decide what to build, but it helps the team confirm it is building the right thing.

Project estimation and planning

Few stages in the software development process cause as much debate as estimation. Spreadsheets multiply, project management meetings drag on, and uncertainty grows. Here, AI introduces order and consistency.

At Relevant Software, our engineers built the AI Estimator, a tool trained on years of software engineering data. It compares requirements with previous builds, calculates cost and delivery scenarios, and suggests optimal resource allocation. What once took multiple workshops now takes a single session.
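The general idea of comparing new requirements with previous builds can be sketched as a similarity-weighted average over past projects. This is not how the AI Estimator itself works, which the article does not describe. It is a simplified illustration, and the `PastProject` type, the feature tags, and the effort figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PastProject:
    name: str
    features: set[str]   # feature tags, e.g. {"auth", "payments"}
    effort_days: float   # actual effort recorded after delivery


def similarity(a: set[str], b: set[str]) -> float:
    """Jaccard overlap between two sets of feature tags."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def estimate_effort(required: set[str], history: list[PastProject], k: int = 2) -> float:
    """Similarity-weighted average effort of the k most similar past projects."""
    scored = sorted(
        ((similarity(required, p.features), p) for p in history),
        key=lambda pair: pair[0],
        reverse=True,
    )[:k]
    total_weight = sum(score for score, _ in scored)
    if total_weight == 0:
        raise ValueError("no comparable past project found")
    return sum(score * p.effort_days for score, p in scored) / total_weight


# Hypothetical delivery history
history = [
    PastProject("booking-app", {"auth", "payments", "calendar"}, 90),
    PastProject("shop-mvp", {"auth", "payments", "catalog"}, 70),
    PastProject("iot-dashboard", {"charts", "device-sync"}, 120),
]
estimate = estimate_effort({"auth", "payments", "catalog", "search"}, history)
print(f"Estimated effort: {estimate:.0f} days")
```

The output is only a starting point. Engineers still adjust it for architecture risk and anything the history does not capture.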

This approach aligns with best practices in modern software development — less guesswork, more insight. It doesn’t replace human expertise but amplifies it, allowing each software developer to focus on architecture and risk analysis rather than repetitive arithmetic. AI transforms estimation from debate into decision, providing clarity that supports continuous learning and continuous integration.

AI-assisted prototyping and code generation

The first lines of code often decide how fast an idea turns into a testable product. With AI, that start becomes dramatically faster. Tools like Copilot, ChatGPT, and internal AI agents automate repetitive code creation, recommend API patterns, and help translate rough logic into functional prototypes. GitHub’s own research on Copilot reports that developers completed a coding task up to 55% faster with AI assistance.

Still, their genius has limits. AI handles scaffolding, not structure. It can generate front-end views or simple data models, but struggles with complex dependencies or scaling logic. That’s why experienced engineers treat AI outputs as material, not architecture.

Used wisely, AI-assisted software development transforms speed into learning. Teams reach the MVP stage faster, test real behavior sooner, and refine based on proof, not assumption. The gain is not fewer developers; it’s more productive ones.

QA and bug detection

Quality assurance has always been the patient cousin of innovation. AI gives it the sharp edge it deserved years ago. Intelligent test platforms now flag anomalies, predict potential failures, and write test scripts that adapt to changing builds.

These systems excel at catching what humans overlook: pattern-based bugs, performance drifts, or inconsistent API behavior. For MVPs and pilot versions, they dramatically shorten release cycles and reduce rework.
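As a rough picture of what flagging a performance drift can mean, the sketch below compares the mean latency of a new build against a baseline using a z-score. Commercial test platforms use far richer models. The function name, the threshold of three standard deviations, and the latency samples are assumptions chosen for illustration.

```python
from statistics import mean, stdev


def flag_drift(baseline_ms: list[float], current_ms: list[float], z_limit: float = 3.0) -> bool:
    """Return True when the current mean latency sits more than z_limit
    baseline standard deviations above the baseline mean."""
    base_mean = mean(baseline_ms)
    base_std = stdev(baseline_ms)
    if base_std == 0:
        # A perfectly flat baseline: any increase counts as drift.
        return mean(current_ms) > base_mean
    z_score = (mean(current_ms) - base_mean) / base_std
    return z_score > z_limit


# Hypothetical response times in milliseconds
baseline = [120, 118, 125, 122, 119, 121, 123]
healthy_build = [121, 124, 120]
degraded_build = [140, 138, 145]

print(flag_drift(baseline, healthy_build))
print(flag_drift(baseline, degraded_build))
```

With these samples, only the degraded build crosses the threshold. Whether a flagged drift is acceptable for release remains a human call.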

Yet full automation remains a myth. AI in quality assurance cannot judge usability, compliance, or the emotional side of user experience. Human testers still decide what “good enough” means. The balance works when machines handle precision and humans handle context, and that balance is what makes quality sustainable when using AI in software development.

In short, AI helps teams complete tasks faster, manage complexity, and stay focused on the work that adds the most value. It simplifies the software development cycle, boosts developer experience, and strengthens collaboration among team members, but only when guided by expert hands and disciplined processes.

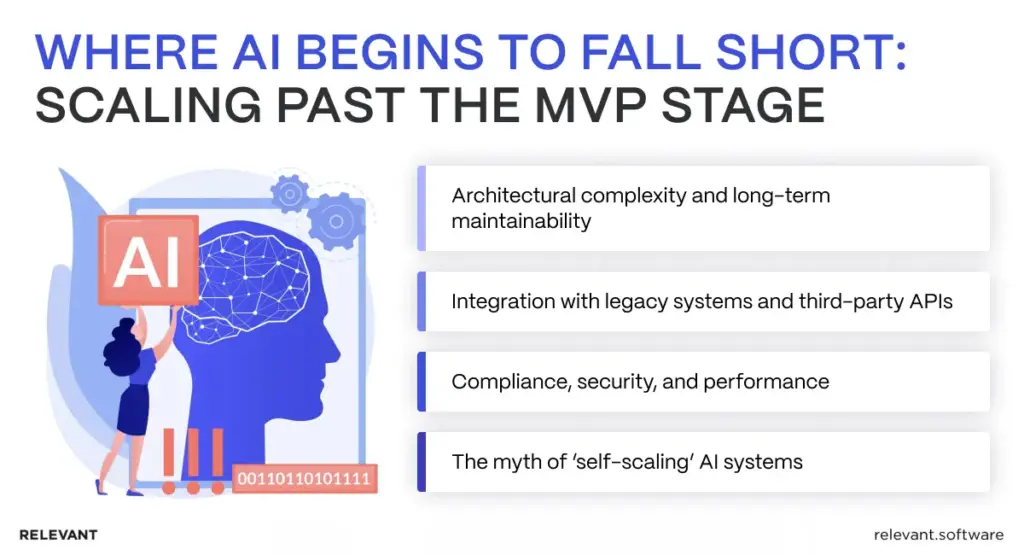

Where AI starts to struggle: scaling beyond MVP

Once an MVP faces real user traffic, the easy wins from AI start to disappear. A prototype that looked impressive in testing now runs into the real demands of scale: complex architecture, live integrations, and compliance reviews that no chatbot can fix instantly. This is where experience outperforms automation and where leaders must weigh benefits against the risks of using AI in software development, including model drift, unclear decision paths, cost creep, and security gaps. AI may help build the car, but human developers still have to design the road.

Architectural complexity and long-term maintainability

As traffic grows and new features pile up, every shortcut taken in the MVP phase starts to attract attention. Real scalability requires an architecture that balances performance, cost, and flexibility, something AI cannot yet reason through. While machine learning models can generate snippets, they fail to navigate trade-offs between distributed systems, databases, and deployment models.

Without seasoned engineers guiding software design, teams risk what many call “AI spaghetti code.” It runs, sometimes impressively fast, but debugging feels like digital archaeology. Complex tasks like dependency management, system integration, and version control demand judgment, not suggestion.

Sustainable scaling isn’t about writing more code. It’s about writing code that survives change. Human-led reviews ensure that what AI produces in seconds continues to function years later, maintaining software quality throughout the full development life cycle.

Integration with legacy systems and third-party APIs

Modern platforms operate in a complex environment where legacy databases, aging APIs, and unpredictable partner systems still shape daily work. Teams often expect AI to streamline these connections, yet real development tasks reveal many issues that automated tools cannot interpret. Old documentation includes vague natural language notes, versions drift quietly, and edge cases stay hidden until they break a workflow.

AI can assist with stubs, wrappers, and quick prototypes, but it cannot anticipate throttling rules or interpret protocols that predate current standards. This is why experience matters. Relevant Software engineers carefully review each integration path, check dependencies, and ensure the stability of the data flow before any deployment.
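Throttling is a good example of a rule an integration has to respect explicitly. The sketch below shows one common pattern, retrying with exponential backoff and honoring a server-provided wait hint when there is one. The `RateLimited` exception, the `call_with_backoff` helper, and the fake partner call are hypothetical names for illustration. A real integration would map them to the partner API’s actual error responses and documented limits.

```python
import random
import time
from typing import Callable, Optional


class RateLimited(Exception):
    """Hypothetical error raised when a partner API answers 'too many requests'."""

    def __init__(self, retry_after: Optional[float] = None):
        super().__init__("rate limited")
        self.retry_after = retry_after


def call_with_backoff(
    request: Callable[[], dict],
    max_attempts: int = 5,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> dict:
    """Call request(); on RateLimited, wait and retry up to max_attempts times."""
    for attempt in range(max_attempts):
        try:
            return request()
        except RateLimited as err:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the error to the caller
            # Prefer the server's hint; otherwise back off exponentially.
            delay = err.retry_after if err.retry_after is not None else base_delay * 2 ** attempt
            # A little random jitter avoids many clients retrying in lockstep.
            sleep(delay + random.uniform(0, 0.1))
    raise ValueError("max_attempts must be at least 1")


# Simulated partner endpoint that throttles the first two calls
attempts = {"count": 0}


def flaky_partner_call() -> dict:
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise RateLimited(retry_after=0.0)
    return {"status": "ok"}


waits: list[float] = []
result = call_with_backoff(flaky_partner_call, sleep=waits.append)
print(result, "after", attempts["count"], "attempts")
```

The `sleep` parameter is injected so the example runs instantly. The actual limits, retry budgets, and error formats come from the partner’s documentation and from engineers who have seen the system misbehave.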

In real projects, AI strengthens the team by supporting analysis and surfacing technical patterns, while human oversight ensures that integration, security, and compliance remain intact. Automation proposes ideas. Expert judgment shapes the final result.

Compliance, security, and performance

When a product moves from concept to production, the rules change. Compliance and security stop being checkboxes; they become survival plans. AI can generate encryption code, but it doesn’t interpret the intent behind “least privilege” or “privacy by design.” It lacks domain awareness of standards like HIPAA or GDPR.

Unchecked automation risks data exposure, flawed permissions, or misconfigured endpoints. That’s why human-led review and continuous deployment safeguards are essential. Teams track test results, evaluate key performance indicators, and compare production data to prevent silent failures.
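One small safeguard of this kind is an automated check for permissions that exceed what each role needs, with the result handed to a human reviewer. The sketch below is a simplified illustration. The role names, the permission strings, and the `excess_permissions` helper are hypothetical, and deciding what a role actually requires remains a human judgment.

```python
def excess_permissions(
    granted: dict[str, set[str]],
    required: dict[str, set[str]],
) -> dict[str, set[str]]:
    """Return, per role, the permissions held beyond the documented need.
    Roles with nothing extra are omitted from the result."""
    return {
        role: extra
        for role, perms in granted.items()
        if (extra := perms - required.get(role, set()))
    }


# Hypothetical access configuration
granted = {
    "support": {"read_tickets", "refund", "delete_user"},
    "analyst": {"read_reports"},
}
required = {
    "support": {"read_tickets", "refund"},
    "analyst": {"read_reports"},
}

findings = excess_permissions(granted, required)
print(findings)
```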

AI can improve coverage and detect anomalies, but specialists validate findings, interpret risk, and document proof. In highly regulated sectors, that line between automation and accountability defines trust, and the impact of AI depends on how tightly it’s audited.

The myth of ‘self-scaling’ AI systems

A persistent myth about the future of software development claims that AI-built systems scale on their own. They do not. Code that supports ten users often fails at ten thousand. AI has no real sense of network latency, caching strategy, or load balancing. It produces code that appears correct in a narrow scenario rather than an architecture designed to endure growth and stress.

A more accurate analogy treats AI as assistance for the prototype, not as the architect of the entire production line. It can help outline services or suggest patterns, but it does not design the factory or coordinate the supply chain. Scalable software needs DevOps, observability, and adaptable delivery pipelines connected to continuous learning. Teams define typical workloads, measure real outcomes, and reshape architectures as usage evolves.

Automation increases developer productivity at the start of a project, yet sustained performance still depends on people who understand trade-offs, capacity limits, and infrastructure constraints. Engineers review logs, refine algorithms, and adjust resources. These activities keep systems stable long after the MVP loses its novelty.

AI remains a powerful accelerator for the future of software development. True scalability, however, is a discipline. It requires human judgment, fluency in platforms and programming languages, and durable processes that convert promising experiments into products that hold under real-world load.

The smart balance: human expertise + AI acceleration

After the first release, teams from top AI software development companies learn an important truth: the goal is not full automation but orchestration. The most effective products combine machine speed with human foresight. This is the real role of AI in software development: artificial intelligence accelerates the routine, while engineers keep the system stable, compliant, and meaningful. It is a partnership, not a replacement plan.

Augmentation, not automation

AI performs best when treated as a co-pilot, not a commander. It automates repetitive work, like generating test data, writing boilerplate code, or scanning for vulnerabilities, while humans steer product direction, architecture, and trade-offs.

At Relevant Software, this hybrid model shapes our delivery process. AI agents assist software engineers with estimations, AI-assisted prototyping, and early quality assurance, while architects and product owners guide logic, compliance, and long-term scalability. The result is not fewer experts, but experts free from monotony who can select the right AI solutions for the job.

This mix turns speed into quality. AI cuts delays, specialists refine outcomes, and the two together create momentum that no standalone approach could achieve. In our projects, AI in software development works as the muscle; human judgment remains the brain.

Predictive planning and continuous improvement

Even after launch, AI still helps. With expert oversight, it strengthens DevOps, analytics, and continuous improvement. Predictive systems forecast load spikes, flag weak points in pipelines, and automate selective code reviews. Guided by machine learning, teams move from reaction to anticipation. That shift supports better problem-solving and gives delivery managers early risk signals that engineers can validate and act on.
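Forecasting a load spike can be pictured with one of the simplest predictive techniques, exponential smoothing. Production systems usually use more capable models that account for seasonality and trends. The function names, the smoothing factor, the 80% headroom rule, and the traffic numbers below are assumptions made for the example.

```python
def forecast_next(history: list[float], alpha: float = 0.5) -> float:
    """Simple exponential smoothing: recent values weigh more than old ones."""
    if not history:
        raise ValueError("history must not be empty")
    level = history[0]
    for value in history[1:]:
        level = alpha * value + (1 - alpha) * level
    return level


def needs_scale_up(history: list[float], capacity: float, headroom: float = 0.8) -> bool:
    """Signal early when the forecast exceeds the chosen share of capacity."""
    return forecast_next(history) > capacity * headroom


# Hypothetical requests per minute over the last five intervals
rising_traffic = [400, 420, 480, 560, 700]
steady_traffic = [400, 410, 405, 415, 400]

print(forecast_next(rising_traffic))
print(needs_scale_up(rising_traffic, capacity=700))
print(needs_scale_up(steady_traffic, capacity=700))
```

A signal like this is an early warning, not a decision. Engineers validate it against what they know about releases, campaigns, and infrastructure limits before acting.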

In Relevant Software’s projects, AI models support our delivery managers with early risk signals and optimization suggestions, while our engineers validate and implement those insights. The loop becomes smarter with each iteration, combining precision with adaptability.

The ROI of knowing where to stop

Knowing when not to use AI applications in product development is as strategic as knowing where to apply them. The real return on investment comes from restraint, directing AI toward measurable speed or insight, and switching to human expertise when judgment, context, or creativity becomes decisive.

Companies that treat AI as a force multiplier, not a miracle, see faster validation and cleaner code without costly rewrites later. It’s the business advantage of precision over automation.

As we often tell Relevant Software clients, AI in software development accelerates progress up to a point. Beyond that point, human intuition, domain expertise, and discipline deliver the real scale. The smartest teams don’t chase full automation; they master the balance.

How we’ve used AI for our clients: our success cases

The best way to understand where AI delivers real value is to see it in motion. In healthcare, fintech, and digital services, our Relevant Software engineers apply AI where it creates real value, such as faster insights, higher-quality data, and stronger user experiences. Here are two AI use cases in software development that show how focused AI consulting services transform results without overreaching.

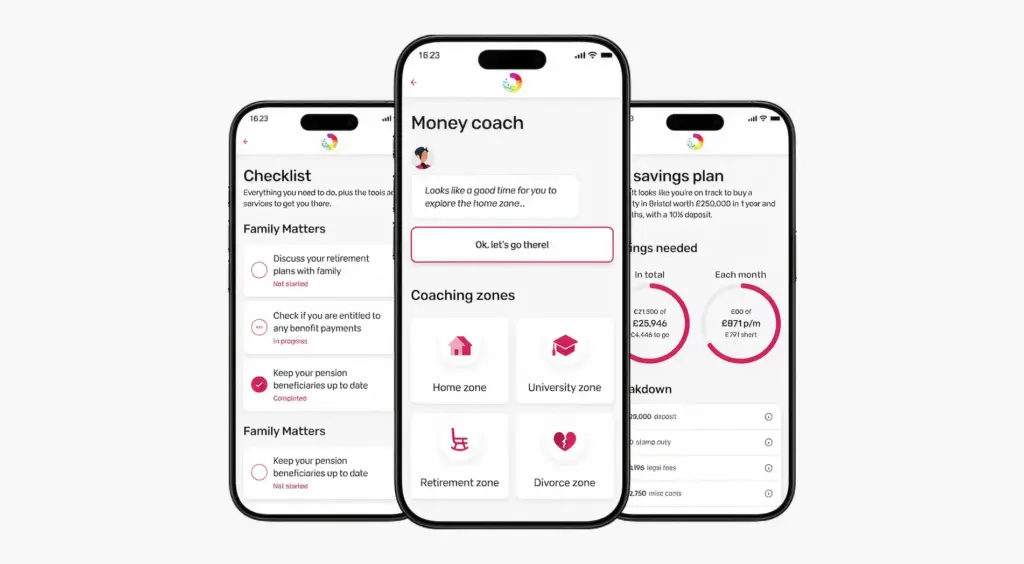

Life Moments – AI coaching agent for financial wellness

Life Moments, a UK-based fintech, wanted to help users make better financial decisions through personal digital coaching. Our team developed an AI coaching agent that understands user inputs, interprets financial behavior, and suggests personalized actions in real time.

The system applies NLP and sentiment analysis to tailor conversations, helping users set savings goals and manage milestones without human advisors. Since launch, engagement and session duration increased sharply, validating the MVP within weeks.

Here, AI handled personalization at scale, while the human-designed logic kept advice compliant and responsible, a clear example of outsourcing AI development serving human intent rather than substituting it.

AstraZeneca – AI for medical document structuring

AstraZeneca needed a way to process thousands of medical reports containing complex data, inconsistent terminology, and unstructured formats. Our engineers built an AI-powered document structuring system that recognizes context, extracts clinical entities, and organizes them into standardized formats for downstream analytics.

The platform now processes over 350,000 CRM records per week, reducing manual work by more than 20 hours. AI didn’t replace medical analysts — it equipped them to review data faster, spot errors earlier, and act on insights rather than sorting through spreadsheets. This project proved that generative AI in software development adds precision when paired with domain expertise and clear process control.

Final thoughts: AI is the spark, not the engine

AI has reshaped how teams imagine, design, and deliver digital products. It cuts through early uncertainty, turns ideas into MVPs faster, and accelerates learning before full investment. But it doesn’t scale products on its own. Once complexity, compliance, and real-world variability appear, human expertise becomes the anchor again.

The takeaway is simple: treat AI as the spark that ignites innovation, not the engine that drives it. Use it to shorten the path to validation, guide experiments, and uncover insights faster than any manual team could. Then hand the wheel back to experienced engineers who can scale those ideas safely and sustainably.

At Relevant Software, we use AI to make software development faster, smarter, and more predictable, while preserving the human expertise that drives successful delivery. Our approach blends automation with craftsmanship, since the future belongs not to teams that automate everything, but to those that know when to pause and apply judgment. This balance is one of the reasons our work is consistently recognized on Clutch, where clients value outcomes that hold up under real-world pressure.

If you’re planning to integrate AI into your next digital initiative or want to hire AI engineers with a solid background, contact us!
